Leveraging AI is incredibly useful when orchestrating and automating your most important workflows. And it’s essential that you have the right AI model for your organization to handle those workflows as expected. Today, we’re excited to announce that you can select your preferred AI model when using AI in Tines products and features.

Evolution of AI in Tines

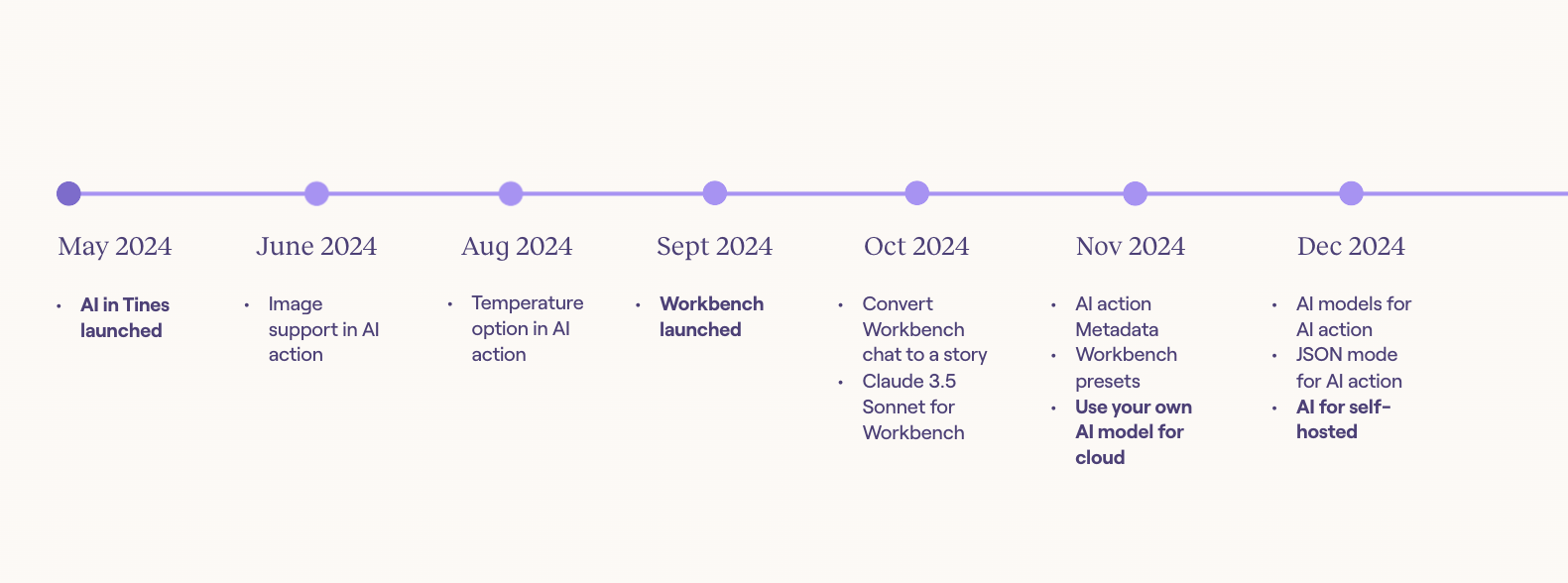

Since the introduction of AI in Tines in May 2024, we’ve continued to evolve the capabilities of our AI features and even introduced Workbench, our Tines-powered chat interface. Our goal has been to reduce barriers to building, running, and monitoring your most important workflows through secure, private AI.

From allowing builders to control the temperature of AI responses to converting Workbench chats to a workflow, we’ve been working towards making AI even more tailored to your workflows. AI in Tines is secure and private by design, with its features powered by secure access to tenant-scoped LLMs (large language models). But, for a variety of reasons, some teams will still prefer to use a different LLM for their workflows. With this in mind, we’ve extended the supported providers to include your own preferred models.

Flexible AI

So why is having the choice so important? AI has been around for a while, and organizations are becoming more familiar with what type of LLM works best for their needs and desired outcomes. Using your trusted LLM can instill confidence in how your information is processed and how your workflows run. Teams with an existing AI license and a trusted provider can use their own LLM within the Tines AI-powered features without disruption.

Similarly, AI in Tines is available wherever you deploy Tines, whether on our shared stack or in a self-hosted or hybrid environment.


Available providers

By default, Tines AI features are powered by Anthropic's Claude and hosted securely through Amazon Bedrock. If your preferred provider exposes an API that follows the OpenAI or Anthropic schema, you can switch from the default to that provider.
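If you're not sure whether a provider "follows the OpenAI or Anthropic schema", it can help to see roughly what those two request shapes look like. The sketch below is illustrative only and is not how Tines itself calls a model: the helper names, the base URL, and the model name are hypothetical placeholders, and the functions only build request descriptions without sending anything. Check your provider's documentation against these shapes.

```python
import json


def build_openai_style_request(base_url, api_key, model, prompt, temperature=0.2):
    """Describe a chat request in the OpenAI Chat Completions shape (hypothetical helper)."""
    return {
        "url": base_url.rstrip("/") + "/v1/chat/completions",
        "headers": {
            "Authorization": "Bearer " + api_key,  # bearer-token auth
            "Content-Type": "application/json",
        },
        "body": {
            "model": model,
            "messages": [{"role": "user", "content": prompt}],
            "temperature": temperature,
        },
    }


def build_anthropic_style_request(base_url, api_key, model, prompt, max_tokens=256):
    """Describe a chat request in the Anthropic Messages shape (hypothetical helper)."""
    return {
        "url": base_url.rstrip("/") + "/v1/messages",
        "headers": {
            "x-api-key": api_key,  # key goes in its own header
            "anthropic-version": "2023-06-01",
            "Content-Type": "application/json",
        },
        "body": {
            "model": model,
            "max_tokens": max_tokens,  # required in this schema
            "messages": [{"role": "user", "content": prompt}],
        },
    }


if __name__ == "__main__":
    # Placeholder values; substitute your provider's real endpoint and model.
    request = build_openai_style_request(
        "https://llm.example.com", "YOUR_API_KEY", "your-model-name", "Hello"
    )
    print(json.dumps(request["body"], indent=2))
```

If your provider's documented endpoint, headers, and body match one of these shapes, it is likely a candidate for the corresponding provider option; the docs remain the source of truth for what is supported.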

Our docs list the available providers to configure. As with all features, this list will evolve. Keep an eye on What’s New for the latest updates.

Maintaining security with AI

Opting to use your own LLM provides flexibility, but also comes with new security responsibilities. As with any third-party application, you engage with the tool at your own risk. But you don’t have to jump in unprepared.

Matt Muller, Field CISO at Tines, shared some of his key recommendations for using AI with confidence, no matter how the underlying AI model is integrated. Matt’s recommendations include:

Ensure your model provider meets your data protection and privacy requirements

Use OWASP Top 10 for LLM Applications to guide threat modeling

Use the strongest encryption algorithms supported by your provider

We recommend saving Matt’s blog for future reference!

We also created a handy guide for talking to your Governance, Risk, and Compliance (GRC) teams about AI. This will help you answer top questions, manage expectations, and share resources they may need to evaluate AI in Tines.

Getting started

Adding your own LLM to Tines is as simple as entering an API key into the AI settings. For step-by-step visuals, read through the AI documentation.

Check out more resources on getting started with AI in Tines below: