As businesses of all sizes continue to embrace G Suite, security teams’ ability to detect and respond to malicious activity in G Suite tenants is more important than ever. Thankfully, Google provides admins with comprehensive reporting and logging that security teams can query to detect suspicious behavior. However, moving these logs from G Suite to a centralized logging environment (e.g., a SIEM) often requires a software engineering project. In this post, we’ll explore how Tines can be used to take logs from G Suite and forward them to ELK (Elasticsearch, Logstash, Kibana) for analysis and alerting.

Prerequisites

To follow along, you’ll need the following:

A Tines tenant (free Community Edition available here)

A G Suite admin account

An ELK stack with a Logstash HTTP Input configured to send logs to Elasticsearch

What logging is available in G Suite?

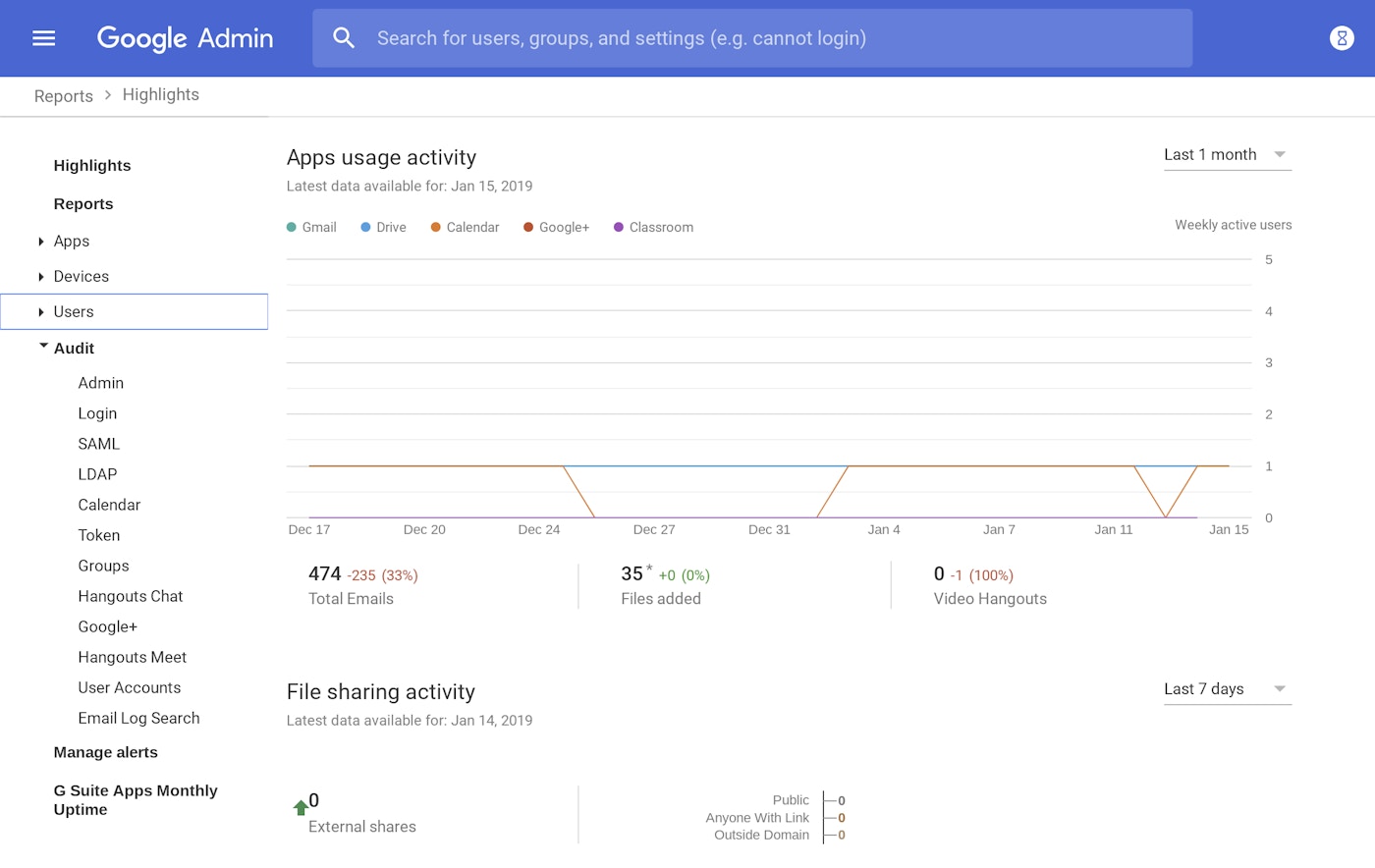

From the G Suite Reports portal, admins can view and search logs related to the following activity in a G Suite account.

Admin actions

Logins

SAML

LDAP

Calendar

Token

Groups

Hangouts Chat

Google+

Hangouts Meet

User Accounts

Email Log Search

G Suite report logs

Enabling the G Suite Admin SDK API

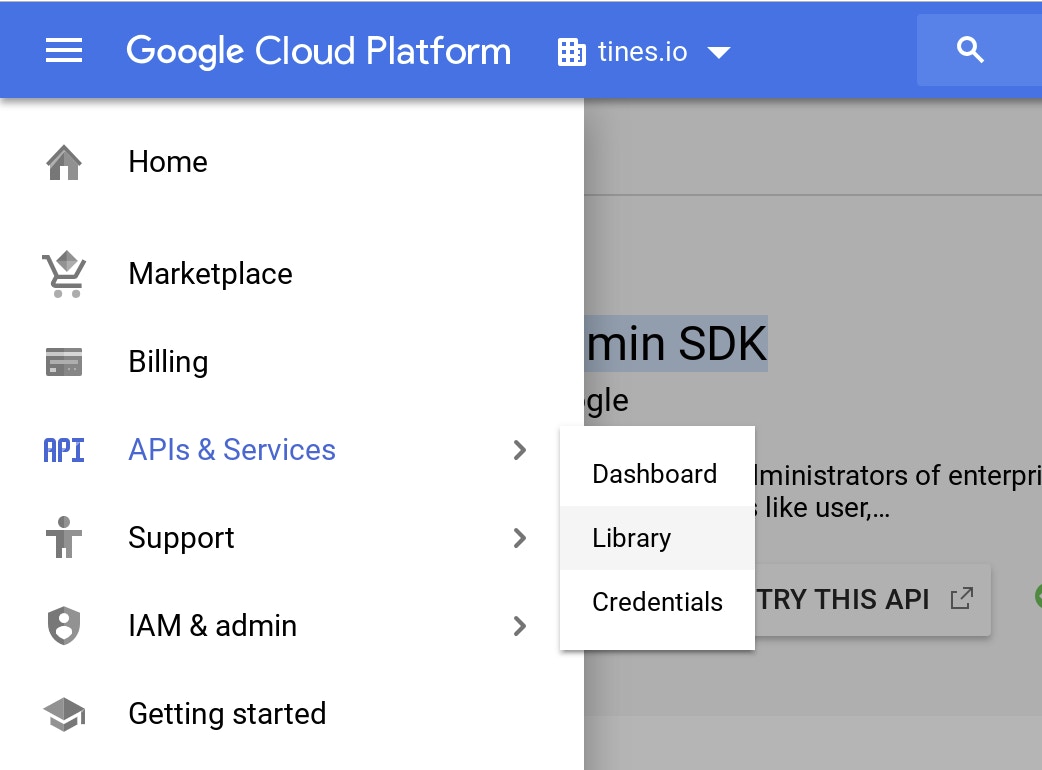

All the information available in the reports portal is also available via APIs in the G Suite Admin SDK. To begin, we need to enable the Admin SDK API. In the Google Cloud Console (console.cloud.google.com), choose “APIs & Services” > “Library”.

APIs and Services

Next, search for “Admin SDK” and press “Enable”.

Creating a Service Account

1. In the top-left corner of the GCP console, click Menu.
2. Click IAM & Admin > Service accounts.
3. Click Create Service Account and, in the Service account name field, enter a name for the service account.
4. (Optional) Enter a description of the service account.
5. Click Create.
6. (Optional) Assign the role of Project viewer to the new account.
7. Click Continue > Create Key.
8. Ensure the key type is set to JSON and click Create. You’ll see a message that the service account JSON key file has been downloaded to your computer.
9. Make a note of the location and name of this file.
10. Click Close > Done.

Adding the service account to G Suite

1. Go to your G Suite domain’s Admin console.
2. Click Security.
3. Click Advanced settings.
4. From the Authentication section, click Manage API client access.
5. Open the JSON key file you downloaded when creating the service account.
6. In the Client Name field, enter the Client ID for the service account (the “client_id” value in the JSON key file).
7. In the One or More API Scopes field, enter the list of scopes that your application should be granted access to. In our case, we only need the following to access the Reports API:
   https://www.googleapis.com/auth/admin.reports.audit.readonly
8. Click Authorize.
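Both the Client ID entered in the Client Name field and the “iss” claim used for the Tines credential come from the downloaded key file. As a minimal sketch, assuming the standard service account key fields “type”, “client_id”, “client_email”, and “private_key”, the Python below pulls those values out of the file; parse_service_account_key, load_service_account_key, and ServiceAccountKey are hypothetical names, not part of any Google library.

```python
import json
from pathlib import Path
from typing import NamedTuple


class ServiceAccountKey(NamedTuple):
    """The service account key fields this walkthrough relies on."""
    client_id: str     # entered in the Admin console's Client Name field
    client_email: str  # used as the JWT "iss" claim
    private_key: str   # PEM private key that signs the JWT; keep it secret


def parse_service_account_key(text: str) -> ServiceAccountKey:
    """Parse the contents of a downloaded JSON key file.

    Raises ValueError if it is not a service account key or a needed field is empty.
    """
    data = json.loads(text)
    if data.get("type") != "service_account":
        raise ValueError("not a service account key file")
    missing = [name for name in ServiceAccountKey._fields if not data.get(name)]
    if missing:
        raise ValueError("key file is missing: " + ", ".join(missing))
    return ServiceAccountKey(**{name: data[name] for name in ServiceAccountKey._fields})


def load_service_account_key(path: str) -> ServiceAccountKey:
    """Read the key file from disk and parse it."""
    return parse_service_account_key(Path(path).read_text(encoding="utf-8"))
```

For example, load_service_account_key("my-key.json").client_id returns the value to paste into the Client Name field (the file name here is a placeholder).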

Creating the Tines credential

Finally, we’ll create a corresponding credential so Tines can authenticate to G Suite. We’ll use a JWT-type credential, signed (RS256) with the private key from the JSON key file. For detailed instructions on how to use JWTs with G Suite, see here. The payload for the JWT will resemble the following:

```json
{
  "iss": "client_email_from_json_key_file@your-project-id.iam.gserviceaccount.com",
  "sub": "admin@your-domain.com",
  "scope": "https://www.googleapis.com/auth/admin.reports.audit.readonly",
  "aud": "https://www.googleapis.com/oauth2/v4/token"
}
```

Building the automation Story

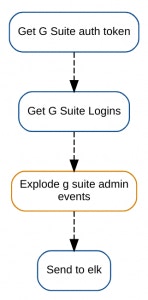

Now that we’ve laid the necessary groundwork, we can start to build the automation Story. To get started, we’ll use an HTTP Request Action to exchange the JWT for an access token, which we’ll then use to fetch the logs. The options block for this Action is shown below:

```json
{
  "url": "https://www.googleapis.com/oauth2/v4/token",
  "content_type": "form",
  "method": "post",
  "payload": {
    "grant_type": "urn:ietf:params:oauth:grant-type:jwt-bearer",
    "assertion": "{% credential G-Suite-Admin-Logs %}"
  },
  "expected_update_period_in_days": "1"
}
```

With the access token emitted by this Action, we can now fetch the report data. As an example, we’ll fetch Login Activity Reports. The options block for this HTTP Request Action is shown below. In this example, we’re fetching all logins since January 1, 2019. Of course, we could also use the now and minus Liquid filters to fetch only the logs from the last 24 hours, 5 minutes, etc.

{

"url": "https://www.googleapis.com/admin/reports/v1/activity/users/all/applications/login",

"content_type": "json",

"method": "get",

"headers": {

"Authorization": "Bearer {{.get_g_suite_auth_token.body.access_token}}"

},

"expected_update_period_in_days": "1",

"payload": {

"startTime": "2019-01-01T00:00:00Z"

}

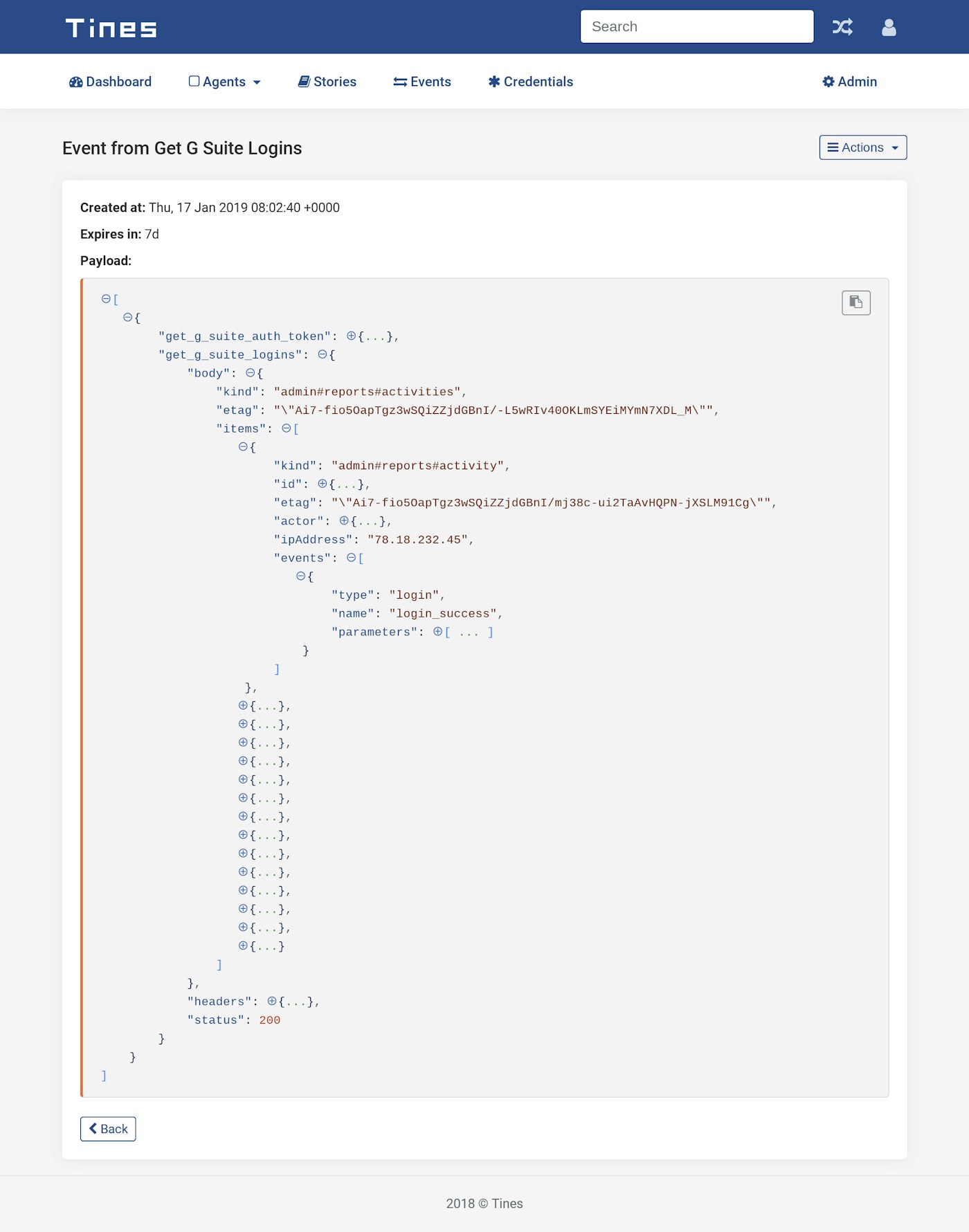

}An event emitted by the above Action is shown below. We can see the event contains an array called “items” which contains all the login occurrences in the G Suite tenant.

Sample G Suite Login Event from Tines

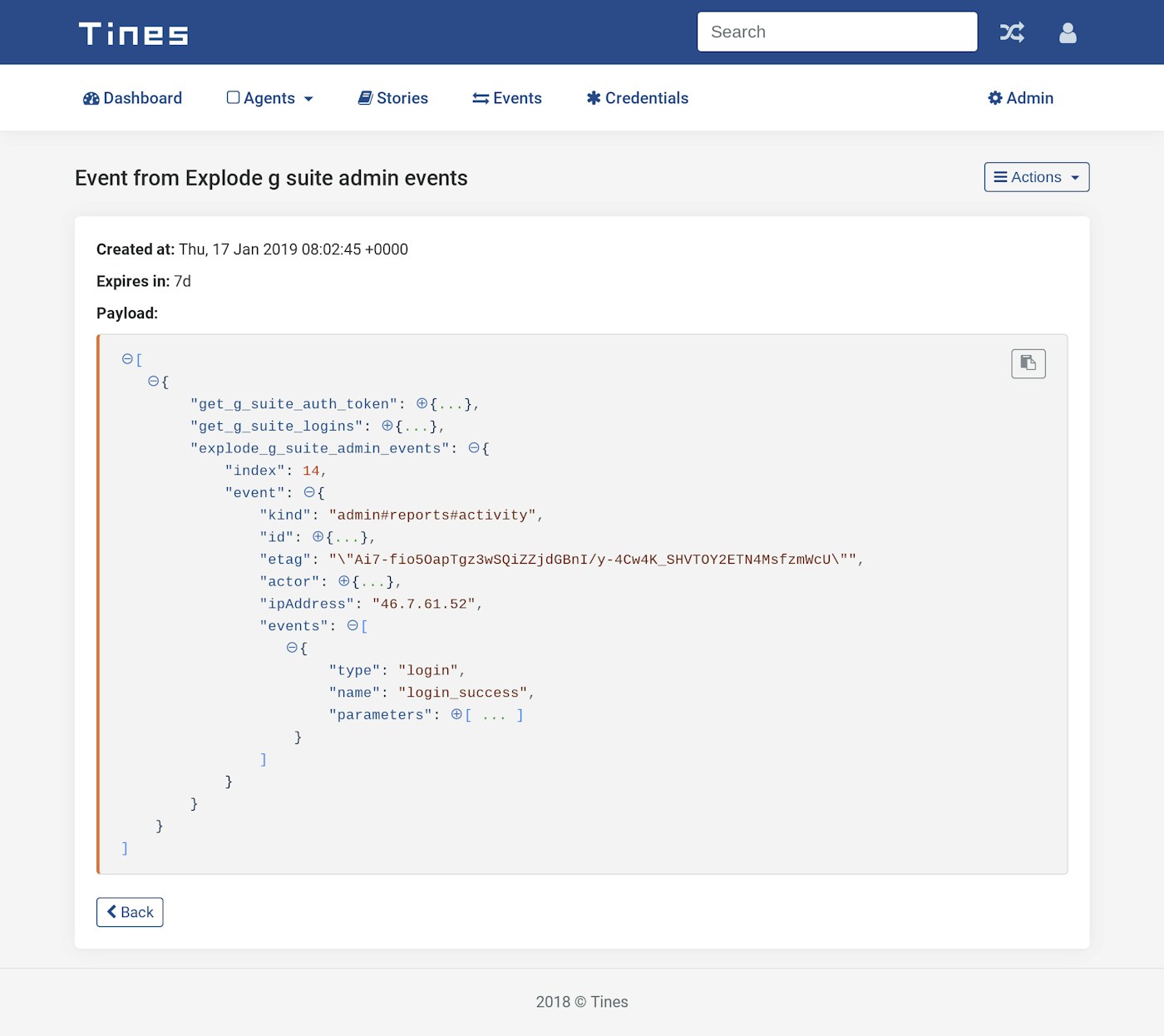
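To see what the credential and the first HTTP Request Action do on the wire, here is a minimal Python sketch. It builds the claim set from the JWT payload shown earlier, adding the “iat” and “exp” timestamps Google requires (with “exp” at most one hour after “iat”), and builds the form-encoded token request without sending it. build_jwt_claims and build_token_request are hypothetical helper names, and RS256 signing is deliberately left out because it needs a third-party library.

```python
import time
import urllib.parse
import urllib.request
from typing import Optional

TOKEN_URL = "https://www.googleapis.com/oauth2/v4/token"
REPORTS_SCOPE = "https://www.googleapis.com/auth/admin.reports.audit.readonly"


def build_jwt_claims(client_email: str, admin_email: str, now: Optional[int] = None) -> dict:
    """Claim set matching the JWT payload above, plus the iat/exp timestamps Google requires."""
    issued_at = int(time.time()) if now is None else now
    return {
        "iss": client_email,      # the service account's client_email
        "sub": admin_email,       # the G Suite admin being impersonated
        "scope": REPORTS_SCOPE,
        "aud": TOKEN_URL,
        "iat": issued_at,
        "exp": issued_at + 3600,  # at most one hour after iat
    }


def build_token_request(signed_jwt: str) -> urllib.request.Request:
    """Form-encoded POST equivalent to the token HTTP Request Action; not sent here."""
    body = urllib.parse.urlencode({
        "grant_type": "urn:ietf:params:oauth:grant-type:jwt-bearer",
        "assertion": signed_jwt,  # the RS256-signed JWT built from the claims above
    }).encode("ascii")
    return urllib.request.Request(
        TOKEN_URL,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )
```

A library such as PyJWT, via jwt.encode(claims, private_key, algorithm="RS256"), could produce the signed assertion; urllib.request.urlopen(build_token_request(assertion)) would then return JSON whose “access_token” field is what the fetch Action reads as body.access_token.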

For ease of downstream analysis, we’d like every individual event to be an individual log in ELK. Therefore, before pushing the events to Logstash, we’ll use an Event Transformation Action in Explode mode to create individual events for every login. This explode Action’s options block should be similar to the following:

```json
{
  "mode": "explode",
  "path": "{{.get_g_suite_logins.body.items}}",
  "to": "event",
  "expected_update_period_in_days": "1"
}
```

The resultant event, emitted by the Event Transformation Action, is shown below:

Sample event showing single login event from G Suite
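Explode mode turns one response into one event per element of “items”. As configured above, the fetch Action also makes a single request, so it only receives the first page of results; the Reports API returns a “nextPageToken” when more results exist, which can be passed back as the “pageToken” query parameter. A minimal Python sketch of both ideas, assuming a caller-supplied get_page function (hypothetical) that performs the authenticated GET with the given query parameters and returns the decoded JSON body:

```python
from typing import Callable, Iterator, Optional

# Hypothetical: performs the authenticated GET with these query params, returns the JSON body.
GetPage = Callable[[dict], dict]


def iter_login_activities(get_page: GetPage, start_time: str) -> Iterator[dict]:
    """Yield one activity per login, following nextPageToken across pages.

    This mirrors the explode step: each element of "items" becomes its own event.
    """
    page_token: Optional[str] = None
    while True:
        params = {"startTime": start_time}
        if page_token:
            params["pageToken"] = page_token
        page = get_page(params)
        # A page with no matching activity may omit "items" entirely.
        yield from page.get("items", [])
        page_token = page.get("nextPageToken")
        if not page_token:
            break
```

Because it is a generator, each login can be forwarded as soon as its page arrives rather than after every page has been fetched.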

Sending login events to Logstash and ELK

To send the login data to ELK, we’ll use Logstash’s HTTP Input plugin. The plugin runs a listener that converts data received over HTTP(S) into log events, which Logstash can then send to a number of destinations, including, in our case, Elasticsearch. As a result, we can post each login event to Logstash with the HTTP Request Action below. In this example, our Logstash HTTP plugin is listening at https://elk.tines.xyz on port 4999. Additionally, we use the “as_object” Liquid filter so that the nested “events” array is sent as a JSON array rather than being flattened into a string.

```json
{
  "url": "https://elk.tines.xyz:4999/gsuite/logins",
  "content_type": "json",
  "method": "post",
  "expected_update_period_in_days": "1",
  "payload": {
    "events": "{{.explode_g_suite_admin_events.event.events | as_object }}",
    "ipAddress": "{{.explode_g_suite_admin_events.event.ipAddress}}",
    "actor": {
      "profileid": "{{.explode_g_suite_admin_events.event.actor.profileId }}",
      "email": "{{.explode_g_suite_admin_events.event.actor.email}}"
    }
  }
}
```

Our finished automation Story will look like the following:

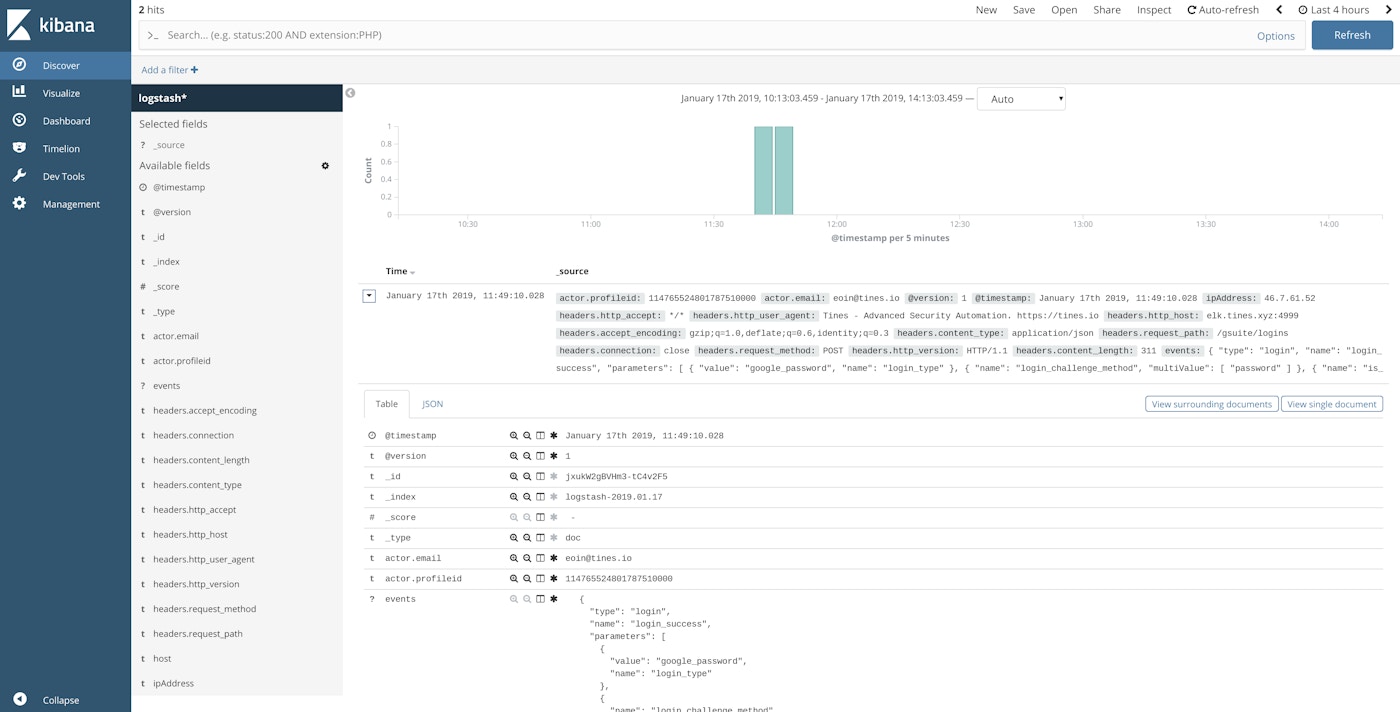
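The final HTTP Request Action maps each exploded login to a small JSON document and POSTs it to the Logstash HTTP input. A minimal Python equivalent, assuming the same listener URL and the field layout of the options block above (to_logstash_document and build_logstash_request are hypothetical names), builds the request without sending it:

```python
import json
import urllib.request

LOGSTASH_URL = "https://elk.tines.xyz:4999/gsuite/logins"  # listener from the example above


def to_logstash_document(activity: dict) -> dict:
    """Keep the fields the Story forwards; "events" stays a JSON array, as with as_object."""
    actor = activity.get("actor", {})
    return {
        "events": activity.get("events", []),
        "ipAddress": activity.get("ipAddress"),
        "actor": {
            "profileid": actor.get("profileId"),
            "email": actor.get("email"),
        },
    }


def build_logstash_request(activity: dict, url: str = LOGSTASH_URL) -> urllib.request.Request:
    """JSON POST for a single login activity; pass it to urllib.request.urlopen to send."""
    body = json.dumps(to_logstash_document(activity)).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Sending one request per login matches the explode-then-post shape of the Story, so each login lands in Elasticsearch as its own document.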

Searching G Suite report data in ELK

With this automation running, we can search these events in Kibana and build alerts that trigger on suspicious behavior.

G Suite Logs in Kibana
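As one illustration of the kind of alert this enables, the sketch below counts failed logins per user across exploded login activities and flags users at or above a threshold. It assumes the Reports API’s “login_failure” event name and uses an arbitrary threshold of five; flag_failed_logins is a hypothetical helper, and in an ELK deployment the same rule would more naturally be expressed as a Kibana or Elasticsearch alert over the indexed documents.

```python
from collections import Counter
from typing import Dict, Iterable


def flag_failed_logins(activities: Iterable[dict], threshold: int = 5) -> Dict[str, int]:
    """Return {email: failure_count} for actors with at least `threshold` login failures."""
    failures: Counter = Counter()
    for activity in activities:
        email = activity.get("actor", {}).get("email", "unknown")
        # One activity can carry several events; count each login_failure event.
        failures[email] += sum(
            1 for event in activity.get("events", []) if event.get("name") == "login_failure"
        )
    return {email: n for email, n in failures.items() if n >= threshold}
```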

Conclusion

The benefits of centrally storing G Suite events in ELK for indexing, searching, and alerting are obvious. The Tines advanced security automation platform provides security teams with a quick, reliable, and scalable mechanism to extract, transform, and deliver this critical source of information. All, of course, without writing a single line of code.

*Please note we recently updated our terminology. Our "agents" are now known as "Actions," but some visuals might not reflect this.*