What is an “AI agent”? Confusion abounds.

There is also some consensus: agents must of course be AI-driven systems. They should have some degree of autonomy, and they should be able to use tools in addition to understanding and reasoning.

But why isn't, say, ChatGPT an agent? According to most definitions out there, it actually is. Yet most (including OpenAI themselves) don’t describe it that way. In general, we seem to have a sense of “we’ll know it when we see it” with AI agents.

To guide our building efforts at Tines, we wanted a more precise definition. So we came up with our own objective criteria – a litmus test – for agents. It boils down to identity.

Learning from the law

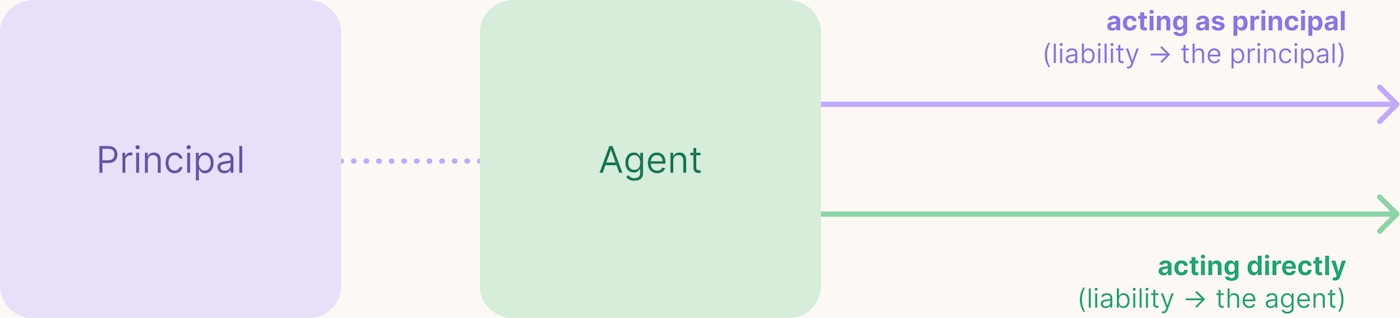

Agency is a longstanding concept in common law, where principals give authority to agents to act on their behalf. For example, a company could appoint a real estate broker to find an office. Or an individual could hire a travel agent to organize a vacation.

What happens when such an agent breaks the law? Are they, or the principal, to blame?

Principals can be held accountable (e.g. if they instruct the agent to do something illegal), but agents make their own decisions, too. So an agent acting under their own identity may be the one prosecuted.

In law, agents can act as their principal, but also independently as themselves.

Audit logs contain identity

In enterprise software systems, we have a clear definition of identity: the actor who is named in the audit log, for any action taken.

Our litmus test for AI agents:

Does the AI system perform actions under its own identity?

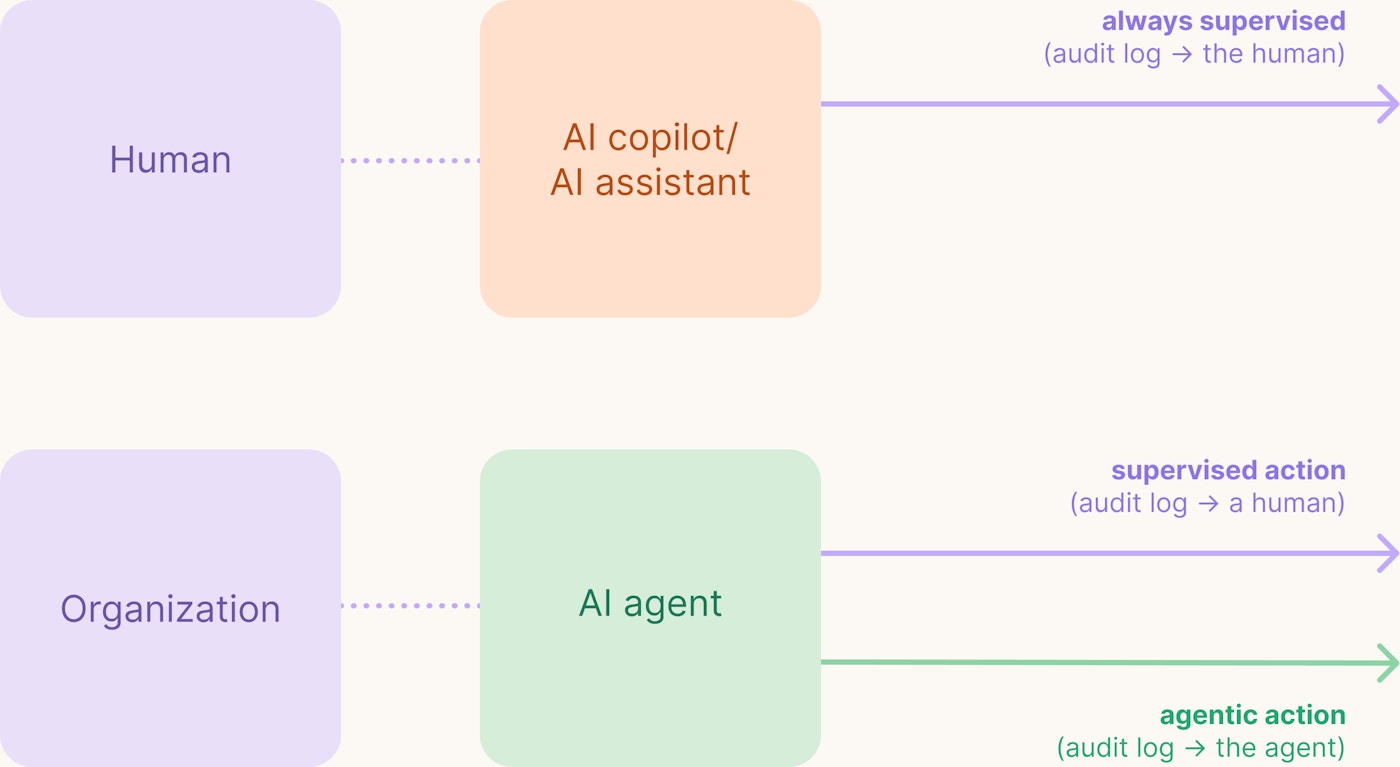

If it does, it’s an agent, and the audit logs will name the agent itself. And if it doesn’t – like most copilots or in-product assistants – it’s not.
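As a rough illustration, the test can be written as a check on a single audit log entry. This is a minimal sketch: the `AuditEntry` fields, the function name, and the identities below are hypothetical and do not reflect the audit log schema of Tines or any other product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditEntry:
    """One audit log record (hypothetical shape, for illustration only)."""
    actor: str   # the identity the log names for this action
    action: str  # what was done


def acts_under_own_identity(entry: AuditEntry, system_id: str) -> bool:
    """Litmus test for one action: is the AI system itself the named actor?"""
    return entry.actor == system_id


# A copilot closes a ticket at a user's request: the log names the human.
copilot_entry = AuditEntry(actor="alice@example.com", action="close_ticket")

# An agent closes the same kind of ticket: the log names the agent.
agent_entry = AuditEntry(actor="triage-agent", action="close_ticket")

print(acts_under_own_identity(copilot_entry, "copilot"))     # False
print(acts_under_own_identity(agent_entry, "triage-agent"))  # True
```

The action is identical in both entries. Only the named actor differs, and that is the whole test.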

Passing this test reveals other properties of agents. Identity implies autonomy, because the AI must use tools and perform actions independently. Identity requires capability and reasoning, because the AI must be capable of making good decisions to be entitled to bear such responsibility – an organization won’t trust it otherwise. And identity doesn’t preclude an agent occasionally deferring to a human for input or supervision – agents in the real world do this, as do human employees with their colleagues and managers.

AI assistants are always supervised by a human. An AI agent can act independently on behalf of an organization. Audit logs are the litmus test.

Applying the litmus test

An AI agent is a system that can take independent actions under its own identity, rather than acting as an extension of a human user. This distinction – who or what is named in the audit log – defines whether a system is truly an agent, or simply an assistant.
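To make the distinction concrete, here is a small sketch that applies the definition across a whole audit log and labels each system as an agent or an assistant. It assumes each record also notes which software carried out the action; that `executed_by` field, like every other name here, is an assumption made for illustration rather than a feature of any real audit log.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditEntry:
    """One audit log record (hypothetical shape, for illustration only)."""
    actor: str        # the identity the log names for this action
    executed_by: str  # assumed field: the software that carried out the action
    action: str


def classify_systems(log: list[AuditEntry]) -> dict[str, str]:
    """Label each system 'agent' if any action it executed is logged
    under its own identity, and 'assistant' otherwise."""
    actors_by_system: dict[str, set[str]] = defaultdict(set)
    for entry in log:
        actors_by_system[entry.executed_by].add(entry.actor)
    return {
        system: "agent" if system in actors else "assistant"
        for system, actors in actors_by_system.items()
    }


log = [
    AuditEntry(actor="alice@example.com", executed_by="chat-assistant", action="draft_reply"),
    AuditEntry(actor="bob@example.com", executed_by="chat-assistant", action="update_ticket"),
    AuditEntry(actor="triage-agent", executed_by="triage-agent", action="isolate_host"),
]

print(classify_systems(log))
# {'chat-assistant': 'assistant', 'triage-agent': 'agent'}
```

The assistant never appears as an actor, because every action it carries out is attributed to the human who asked for it.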

By this definition, many great pieces of AI software are not agents, including our Workbench product, which is designed to operate within human control. And that's OK: we believe there will always be an important role for software that helps humans carry out their work more effectively.

But the promise of AI agents is something beyond this, and we're excited for that, too.