One of the beautiful things about APIs and multiple integrations is that you can combine them to deliver immense value quickly. With an orchestration and automation engine, a nexus forms and you can achieve truly complex tasks with an intelligent interface and simple logic. Bearing this in mind, we’re going to show you how to quickly upload text and attachments from emails to AWS S3 and then how to perform additional security and semantic analysis on them.

S3 (Simple Storage Service) was Amazon Web Services’ (AWS) first cloud offering in 2006 and, to this day, it is pivotal to most of their services and operations. We will use one of Amazon’s oldest services and one of its newest to build a simple workflow: AWS S3 and AWS Comprehend, one of Amazon’s consumable AI (Artificial Intelligence) offerings. We will also use Hybrid Analysis (which leverages CrowdStrike’s Falcon Sandbox) for additional enrichment and analysis of files.

Simplexity Without Leaky Abstractions

Simple operations, when joined together, have a habit of complicating themselves but it needn’t be so. Abstraction is a way of hiding complexity while simplifying engagement. Oversimplification can result in a loss of detail but not when you retain the capability to go deeper when needed. So, in the following Story, we will focus on one main analysis thread while also leveraging AI on the side for longer-term, deeper semantic analysis. We will also highlight how to modularize workflows using the STS (Send To Story) feature as an egress point from our main Story.

We begin by checking the standard phishing/abuse mailbox that most organizations provide so that employees or customers can forward dubious messages and attachments. Security teams normally analyze these emails and take the required actions, including reporting back to the submitter, but we will automate this process. And rather than discard the data, we will retain these emails and files to help improve our overall security posture. This retention will help us build a broader picture of what types of scams and exploits are being crafted to target our specific organization and teams. Previous blogs have covered URL extraction and analysis, so for this Story, we will start by sending a phishing email from the KnowBe4 phishing security test that targets us with a COVID-related message (and we will add an extra attachment). The email that arrives in the phishing report mailbox originally comes from “noreply@world-health.org” and contains:

Dear Sir/Madam,

Since there have been documented cases of the coronavirus in your area, the World Health Organization has prepared a document that includes all of the necessary precautions for you to take against the coronavirus infection.

We strongly recommend that you read the document attached to this message.

With best regards,

Dr. Darlene Campbell (World Health Organization - United States)

Getting Data into S3 Buckets

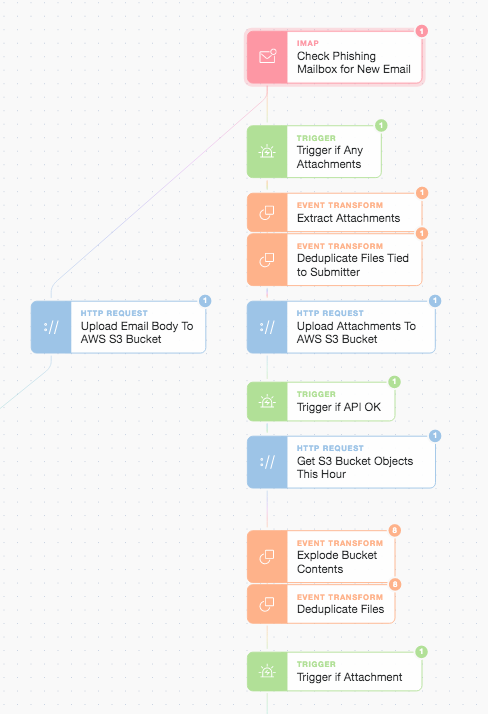

We begin by checking the abuse/phishing mailbox and extracting the required data. We have simple access to all portions of the message including any attachments and their hashes.
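As a rough illustration of what "all portions of the message including any attachments and their hashes" can look like outside the Story, here is a minimal sketch using only Python's standard `email` and `hashlib` modules. The function name `extract_report`, the shape of the returned dictionary, and the sample message are assumptions made for this example; they are not how the Story itself is implemented.

```python
import hashlib
from email import message_from_bytes, policy
from email.message import EmailMessage


def extract_report(raw: bytes) -> dict:
    """Pull headers, plain-text body, and attachment hashes from a raw email."""
    msg = message_from_bytes(raw, policy=policy.default)
    body_part = msg.get_body(preferencelist=("plain",))
    attachments = []
    for part in msg.iter_attachments():
        # decode=True reverses the transfer encoding (e.g. base64).
        payload = part.get_payload(decode=True) or b""
        attachments.append(
            {
                "filename": part.get_filename(),
                "sha256": hashlib.sha256(payload).hexdigest(),
                "size": len(payload),
            }
        )
    return {
        "message_id": str(msg["Message-ID"]),
        "from": str(msg["From"]),
        "subject": str(msg["Subject"]),
        "body": body_part.get_content() if body_part else "",
        "attachments": attachments,
    }


# Hypothetical sample message, built locally so the sketch is self-contained.
sample = EmailMessage()
sample["Message-ID"] = "<abc123@example.com>"
sample["From"] = "reporter@example.com"
sample["Subject"] = "Fwd: Coronavirus precautions"
sample.set_content("Please read the attached document.\n")
sample.add_attachment(
    b"%PDF-1.4 placeholder",
    maintype="application",
    subtype="octet-stream",
    filename="advice.pdf",
)

report = extract_report(sample.as_bytes())
```

The SHA-256 digest is computed over the decoded attachment bytes, so the same file forwarded twice hashes to the same value regardless of how the mail client encoded it.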

Main Story with AWS S3 PUTs and GETs

When we upload the attachment to AWS S3, we prefix the object file name with the email Message-ID (we also base64url_encode the Message-ID to get around some AWS S3 special-character constraints). By using the email’s Message-ID as part of the key for the S3 objects, we can tie multiple attachments and analyses back to a specific message. We also use the date and hour in the S3 object pathname to make time-based object retrieval trivial (and cheaper) compared with filtering on the “LastModified” field. This is how the “Get S3 Bucket Objects This Hour” step works above. We then deduplicate subsequent file handling based upon the SHA-256 hash of the file and the submitter’s email address. We also only continue onwards with objects in the bucket that are attachments.
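The key scheme and the deduplication step can be sketched in a few lines of Python. The exact path layout (`YYYY/MM/DD/HH/<encoded Message-ID>/<filename>`), the stripping of base64 padding, and every function name below are assumptions chosen for illustration; the Story may lay out its keys differently.

```python
import base64
from datetime import datetime, timezone


def encode_message_id(message_id: str) -> str:
    """Base64url-encode a Message-ID so '<', '>' and '@' never reach the key."""
    raw = message_id.strip().encode("utf-8")
    # Padding is stripped here as an assumption, to keep '=' out of the key.
    return base64.urlsafe_b64encode(raw).decode("ascii").rstrip("=")


def decode_message_id(token: str) -> str:
    """Reverse encode_message_id, restoring the padding that was stripped."""
    padded = token + "=" * (-len(token) % 4)
    return base64.urlsafe_b64decode(padded).decode("utf-8")


def hour_prefix(when: datetime) -> str:
    """Date-and-hour prefix; 'when' should be timezone-aware."""
    return when.astimezone(timezone.utc).strftime("%Y/%m/%d/%H/")


def object_key(message_id: str, filename: str, when: datetime) -> str:
    """Assumed layout: <hour prefix><encoded Message-ID>/<filename>."""
    return f"{hour_prefix(when)}{encode_message_id(message_id)}/{filename}"


def dedupe(items: list) -> list:
    """Keep the first item per (SHA-256, submitter address) pair."""
    seen = set()
    unique = []
    for item in items:
        fingerprint = (item["sha256"], item["submitter"].lower())
        if fingerprint not in seen:
            seen.add(fingerprint)
            unique.append(item)
    return unique


received = datetime(2020, 4, 1, 13, 30, tzinfo=timezone.utc)
key = object_key("<abc123@example.com>", "advice.pdf", received)
```

With a layout like this, fetching "this hour's" objects becomes a single prefix listing (for example, boto3's `list_objects_v2` accepts a `Prefix` argument) rather than a scan of the bucket followed by filtering on timestamps.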

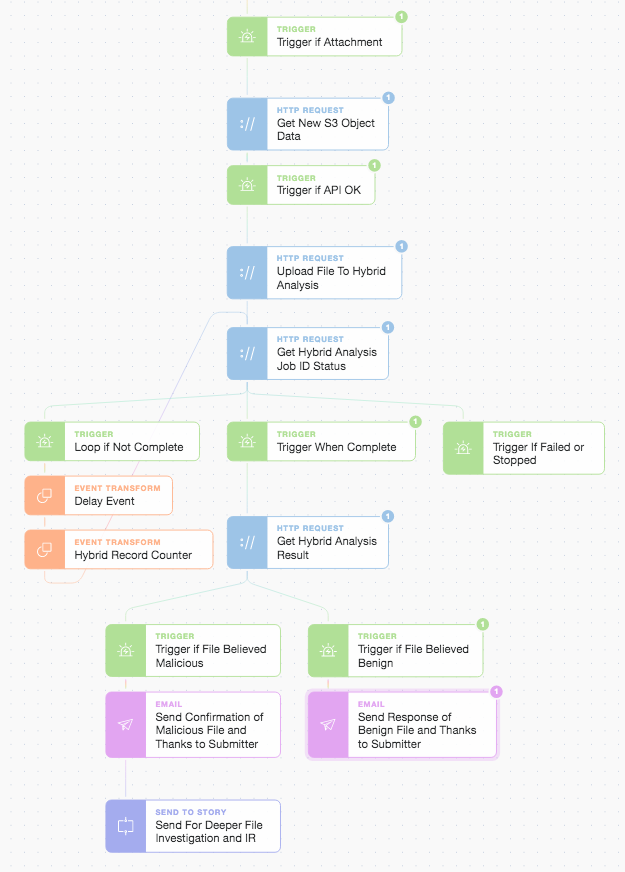

Hybrid Analysis, Response, and STS (Send To Story) Deeper Investigation

After getting the object, we upload the file to Hybrid Analysis, wait for the remote analysis job to complete (in a simple delay loop), and then take action based upon the received threat score. Hybrid Analysis performs multiple checks and returns a host of additional metrics and classifications that we could use to decide what to do next. Irrespective of the analysis outcome, we close the loop with the submitter and thank them for their continued vigilance, confirming whether the file they sent is benign or malicious. If it is malicious, we explain that it is going on for deeper investigation and possible Incident Response (IR). This is actioned via another modular Story, which could be anything from opening an issue in Jira Service Desk (with pre-populated ticket details and clickable next steps) to parallel automatic submission of the file to a wider array of anti-malware engines. It all depends on how you want to model and automate your workflow, playbook, or SOP (Standard Operating Procedure), as you can easily perform a whole host of actions either linearly or in parallel.

Simple and Real AI (Artificial Intelligence)

While the main file storage and analysis workflow is executing, we also perform NLP (Natural Language Processing) on the subject and body of the email to extract entities from the text. Depending on the size of the email body, we request either a synchronous or an asynchronous analysis job from AWS Comprehend.
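The size check that decides between the two job types can be as small as the following sketch. The `SYNC_LIMIT_BYTES` value is a placeholder assumption, not the documented AWS limit, so check the current Comprehend quotas before relying on it. In boto3, the synchronous path would typically map to `detect_entities` and the asynchronous path to `start_entities_detection_job`, which reads its input from S3.

```python
# Placeholder threshold (an assumption for this sketch); consult the
# current AWS Comprehend quotas for the real synchronous size limit.
SYNC_LIMIT_BYTES = 5000


def choose_mode(text: str, limit: int = SYNC_LIMIT_BYTES) -> str:
    """Return 'sync' for small bodies and 'async' for large ones.

    Size is measured in UTF-8 bytes rather than characters, because
    accented or non-Latin text takes more than one byte per character.
    """
    size = len(text.encode("utf-8"))
    return "sync" if size <= limit else "async"


mode_short = choose_mode("We strongly recommend that you read the document.")
mode_long = choose_mode("a" * 5001)
```

Measuring bytes instead of characters matters for phishing mail in particular, since lures are often written in languages or character sets that expand when encoded.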

AWS Comprehend Entity Detection and STS (Send To Story) Data Mining

AWS Comprehend can extract entities such as “COMMERCIAL_ITEM”, “DATE”, “EVENT”, “LOCATION”, “ORGANIZATION”, “OTHER”, “PERSON”, “QUANTITY”, or “TITLE” as per the below example response. This enables us to collect and record these sorts of entities from hundreds if not thousands of emails to use in data mining, analytics, or reporting. We can understand how the organization is being targeted and how we could perhaps better spend our security awareness and training budget by focusing on either frequent or infrequent phishing topics and ransomware campaigns.
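To make the data-mining idea concrete, here is a minimal sketch that tallies entities across many responses. It assumes each response is a dictionary with an `Entities` list whose items carry `Type`, `Text`, and `Score` keys; the sample data is hand-written for illustration and is not real Comprehend output, and the `min_score` cut-off of 0.8 is an arbitrary assumption.

```python
from collections import Counter
from typing import Iterable


def tally_entities(responses: Iterable[dict], min_score: float = 0.8) -> Counter:
    """Count (type, normalized text) pairs above a confidence cut-off."""
    counts = Counter()
    for response in responses:
        for entity in response.get("Entities", []):
            if entity.get("Score", 0.0) >= min_score:
                # Lower-case and trim so spelling variants group together.
                key = (entity["Type"], entity["Text"].strip().lower())
                counts[key] += 1
    return counts


# Hand-written sample responses (illustrative only).
sample_responses = [
    {
        "Entities": [
            {"Type": "ORGANIZATION", "Text": "World Health Organization", "Score": 0.99},
            {"Type": "OTHER", "Text": "coronavirus", "Score": 0.60},
        ]
    },
    {
        "Entities": [
            {"Type": "ORGANIZATION", "Text": "world health organization ", "Score": 0.95},
            {"Type": "PERSON", "Text": "Darlene Campbell", "Score": 0.97},
        ]
    },
]

totals = tally_entities(sample_responses)
top_entities = totals.most_common(1)
```

Run over a month of reported emails, a tally like this shows which organizations, people, or events are impersonated most often, which is the kind of signal that can steer awareness training.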

This automation approach is far more scalable than a manual analyst approach and can evolve in tandem with your platforms and organization. There are many additional services that could be readily integrated into the above Story, in as many combinations or permutations as your team needs. What else would you or your team add to the above Story?

*Please note we recently updated our terminology. Our "agents" are now known as "Actions," but some visuals might not reflect this.*