In a recent webinar on SOAR’s evolving role in security and beyond, I chatted with Andrew Green, Networking & Security Research Analyst at GigaOm.

We kicked things off with a brief discussion on Gartner’s Hype Cycle for ITSM report, which described the SOAR category as “obsolete” and prompted some commenters to declare that SOAR is “dead”.

Our take: SOAR will always be important, but traditional SOAR platforms are failing to keep pace with evolving modern security challenges. To stay relevant and useful, these platforms must adapt.

Read on to see what Andrew had to say about:

the limitations and advantages of traditional/legacy SOAR tools

the best use cases of AI in SOAR

current limitations of AI and what the future holds

accountability and decision-making in AI

Exploring SOAR’s limitations and strengths - then and now

Matt: There's been a lot of conversation lately about SOAR as a technology category. A lot of this discussion stemmed from a Gartner Hype Cycle for ITSM report that put SOAR in the ‘trough of disillusionment’.

The Gartner report focused on some of the shortcomings of SOAR-specific technology, and not necessarily SOAR as a concept. Even though there may be some valid issues with legacy SOAR platforms, every security team is still very enthusiastic about automation. Andrew, I would love to hear your view on the overall SOAR market.

Andrew: I've been very vocal against making SOAR obsolete. I think Gartner did that in favor of looking at automation through an AI perspective. I don't think that’s the right approach, at least from a maturity point of view. Maybe, in the future, it would make sense.

Matt: As we speak with customers and folks who are evaluating what's out on the market, we hear that folks really struggle with:

Deploying legacy SOAR platforms

Operationalizing these platforms, which involves either learning a programming language or a domain-specific language

Finding the right people out of a small group of legacy SOAR experts to build the automations

Being forced to use pre-defined integrations instead of building their own custom automations

I’d love to know what you’re seeing out there.

Andrew: We also have a list of challenges associated with legacy SOAR. Pinning those challenges entirely on SOAR, I think, is the wrong approach. SOAR is one of the most exciting spaces, mainly because it's a natural place for AI to happen. You have your AI logic box, and you can define automation logic before and after. It's also a good place to build AI agents.

You still need to have a framework where you can define what’s going into the AI and what’s coming out. Implement your guardrails. Make sure you're not losing any data, outputs are sanitized, and so on.
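To make the "what goes in, what comes out" idea concrete, here is a minimal Python sketch of a guardrail wrapper around an AI step. It is an illustration only, not how Tines or any particular SOAR platform implements this: the function names, the allowed verdict labels, and the JSON output shape are all assumptions, and `model` stands in for whatever model call your workflow makes.

```python
import json
import re

# Hypothetical guardrail rules, chosen purely for illustration.
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")
ALLOWED_VERDICTS = {"benign", "suspicious", "malicious"}


def redact(text: str) -> str:
    """Input guardrail: mask email addresses before the text reaches the model."""
    return EMAIL.sub("[REDACTED_EMAIL]", text)


def validate_output(raw: str) -> dict:
    """Output guardrail: accept only a JSON object with a known verdict."""
    data = json.loads(raw)  # invalid JSON raises ValueError (JSONDecodeError)
    if not isinstance(data, dict):
        raise ValueError("model output is not a JSON object")
    verdict = data.get("verdict")
    if verdict not in ALLOWED_VERDICTS:
        raise ValueError(f"unexpected verdict: {verdict!r}")
    # Keep only the fields we expect, and cap the summary length.
    return {"verdict": verdict, "summary": str(data.get("summary", ""))[:500]}


def guarded_step(alert_text: str, model) -> dict:
    """Run the AI step with logic before (redact) and after (validate)."""
    raw = model(redact(alert_text))
    return validate_output(raw)
```

Anything that fails validation raises an error instead of flowing on to the next step, so the rest of the workflow only ever sees output in the expected shape.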

SOAR is very much still valid today. It’s going to continue being valid, and it’s a natural gateway into implementing AI in your environment.

Andrew Green, Networking & Security Research Analyst, GigaOm

Types of SOAR vendor as outlined in the GigaOm Radar report for SOAR

Defining the different types of SOAR vendors

Matt: The world of SOAR and automation is fairly large. Andrew, as you evaluate all these different types of vendors, how are you categorizing them?

Andrew: Generally speaking, you have 3 types of SOAR vendors:

Pure-play vendors: Standalone SOAR solutions

Security players: Big companies that have acquired or developed SOAR in their wider portfolio

Cross-portfolio players: Vendors that offer solutions outside of security automation

As Tines has evolved, it’s gone from being a security player to a cross-portfolio player.

But I don’t want to spend too much time putting vendors into categories. Generally, if you’re going to buy a SOAR solution, it will be from one of those 3 types of vendors, which each have their own strengths and weaknesses.

AI in SOAR - use cases and limitations

Matt: Everyone is trying to figure out how to add AI to their platforms and solutions. I’ve seen five main use cases for AI in SOAR that address time-consuming tasks for humans:

Co-pilot/assistant for helping with tasks like crafting API calls

Initial triage for security incidents

Data summarization and extraction

Threat intelligence reporting

Documentation

AI really excels at these things. But we've also seen some of the more ambitious AI implementations reach the edges of where AI can be helpful.

If you look at how large language models (LLMs) are trained and constructed, this makes sense. If you have a public, well-structured data source, just about every LLM can figure out how to leverage that.

On the other hand, if you have an internal, home-built, undocumented tool or platform, AI may just hallucinate an answer. So that is one limitation for these edge cases, where you don’t have a lot of data that’s publicly accessible, or you’re unable to connect your internal documentation source.

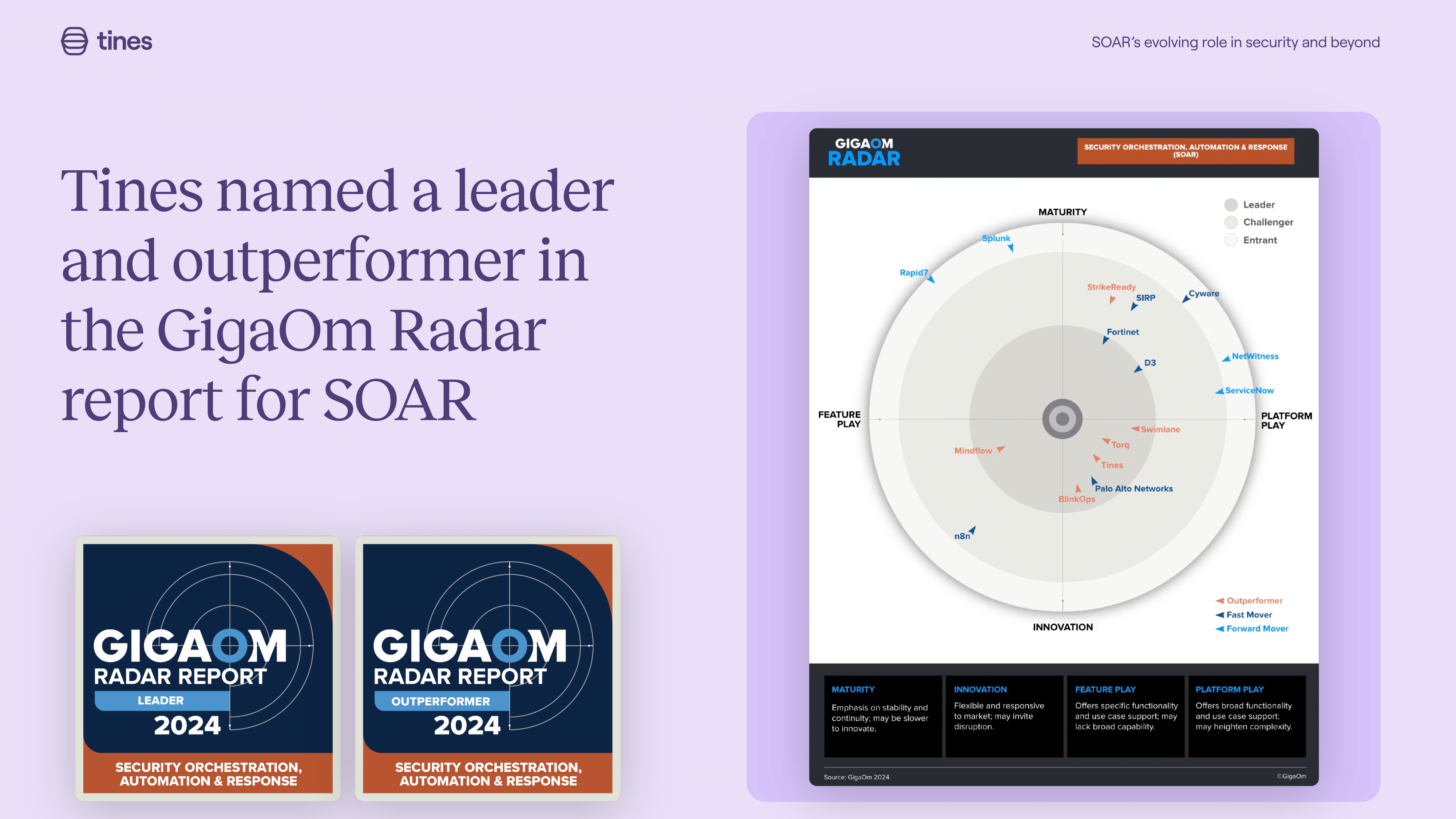

Tines's placement in the GigaOm Radar report for SOAR

The future of AI in SOAR

Matt: In general, I think AI is making life easier for the relevant use cases. Andrew, you’ve been looking at this market for a while now, and I’d love to get your thoughts on where things are going from here.

Andrew: I'll start off with an anecdote from last night. I'm looking to use a new RFP tool. I logged into it, but I didn't know how to create a new project. So I asked the assistant built into the platform, ‘How do I create a new project?’ The agent basically said, ‘It might be “new project” or “create project” - you’ll have to see.’

What’s the point of having the agent, if it’s not going to give you something specific? Proper implementation would’ve been something like, ‘I’ll create a new project for you.’ And then it would automatically create and open the new project so you can start populating it.

I think there are two main ways of looking at AI in security. One is AI for security - the other is security for AI.

Andrew Green, Networking & Security Research Analyst, GigaOm

In Tines, you’ve got 3 flavors of AI: workflow generation, incident response, and data analysis and summarization.

Whenever you want to create a custom workflow but you don’t have time for it, you can just tell AI what you want to make, and it will create a workflow that will get you most of the way there. You’ll still have to do some manual work, but it will save you time.

When you’re responding to incidents, you can ask AI to identify the most severe incident from a set of alerts, or analyze a set of logs for anything anomalous.

AI, accountability, and decision-making

Andrew: AI is very good at analyzing big chunks of structured data that would be hard to go through and parse manually. My caveat with this is - and I’ve got experience with this - I don’t know how confident I would be putting my name on something that was generated by AI. I think this is a struggle that most people have gone through, even if they don’t say it.

If I’m going to report to my manager that I have determined this is a false positive based on the output of AI, and it turns out not to be a false positive, whose fault is it? Where does the accountability lie?

Just like with self-driving cars, when you have an accident, some people argue it should be the manufacturer’s fault. But realistically, it’s still going to be on the end user. I think this is still a gray area. It’s important to have processes in place about this before starting your AI journey.

What do you think, Matt?

Matt: I love AI as a first-draft tool. If I understand an alert and it’s just time-consuming for me to write up a summary, that feels like an ‘okay’ thing to put my name on. I can read the AI’s output and check if it’s hallucinated, but at the end of the day, I’m still the expert - it’s still my decision.

If I’m having the AI fully do the work for me, and maybe I don’t understand the output or I’m not able to vet the response or confirm whether it’s hallucinating, I’m uncomfortable with that.

Matt Muller, Field CISO, Tines

I’m curious - have you seen use cases for security teams where AI is acting as a decision-maker and that feels comfortable? Is the current generation of AI smart enough to act as a decision-maker, or is it still more of an assistant?

Andrew: I don’t know, and I don’t have the visibility into how people are using this day-to-day to comment. What I can say is that, even though it might give you the wrong information, and accountability lies with whoever the business decides it lies with, it might still be better than humans at processing hard-to-read data.

I wouldn’t discard it completely. It is a tool - it’s not autonomous right now at all. At the moment, efforts should be around making sure the output is as correct as humanly possible.

Once you get to the human level, you can go with AI’s output with more confidence. It can be something as basic as using two different models. If the models don’t match, something’s clearly wrong, and somebody should investigate it. If you’re using two models and they do match, it’s more likely to be a genuine false positive.
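The two-model check Andrew describes can be sketched in a few lines of Python. This is a rough illustration under stated assumptions: `model_a` and `model_b` are hypothetical callables that take the alert text and return a verdict label, and the returned dictionary shape is invented for the example.

```python
from typing import Callable

# Hypothetical interface: takes alert text, returns a verdict label.
Classifier = Callable[[str], str]


def cross_check(alert: str, model_a: Classifier, model_b: Classifier) -> dict:
    """Ask two independent models for a verdict and compare them."""
    verdict_a = model_a(alert).strip().lower()
    verdict_b = model_b(alert).strip().lower()
    if verdict_a == verdict_b:
        # Agreement raises confidence; it does not prove the verdict is right.
        return {"verdict": verdict_a, "needs_human_review": False}
    # Disagreement: record both answers and hand the alert to a person.
    return {
        "verdict": None,
        "needs_human_review": True,
        "disagreement": (verdict_a, verdict_b),
    }
```

Accountability still sits with whoever signs off on the result. The check only decides which alerts get a closer look from a human.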

Matt: I really like how Anthropic describes their AI models in their documentation: ‘Treat Claude like a very smart coworker who knows absolutely nothing about your environment.’ In that case, you’d trust it to do some of the heavy lifting, but you’d still want some level of input and oversight. I think that sums up how we’ve tried to implement AI.

Tines offers the ability to integrate AI into a deterministic workflow so you get the best of both worlds. You input the data that’s sent to the AI model connected to your private instance. You can also set up steps to sanity-check the output to make sure the AI’s not hallucinating.

Matt Muller, Field CISO, Tines

We also offer Workbench, where you can directly interact with the AI via a chat-style model. But ultimately, the decision-making is up to you.

If you don't have a deep understanding of how this process works, it will be hard for you to say whether AI will do it as well or better than a human. We still have a lot of work ahead to ensure we’re using AI with the right guardrails in place.

It’s a great assistant, but I don’t think AI is ready to be completely let loose in any sort of autonomous way just yet.

Andrew: Aside from the things you can’t let AI loose on, there are less impactful tasks that you can outsource to AI right now - for example, finding the right documentation or highlighting an anomaly.

Comparing instances is another good example. AI is less likely to miss red flags, like two related incidents that share the same IP address, than a human operator. In general, AI is great for piecing together data that would be otherwise hard to find.
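For the shared-IP example, the correlation itself is simple enough to sketch in Python, whether it runs as a deterministic step or as a check on what a model reports. The incident record shape (`id` and `ips` fields) is an assumption made for this illustration, and the addresses come from documentation-only ranges.

```python
from collections import defaultdict


def incidents_sharing_ips(incidents: list[dict]) -> dict[str, list[str]]:
    """Return each IP that appears in two or more incidents, with their ids."""
    by_ip = defaultdict(set)
    for incident in incidents:
        for ip in incident["ips"]:
            by_ip[ip].add(incident["id"])
    return {ip: sorted(ids) for ip, ids in by_ip.items() if len(ids) > 1}


incidents = [
    {"id": "INC-1", "ips": ["203.0.113.7", "198.51.100.2"]},
    {"id": "INC-2", "ips": ["203.0.113.7"]},
    {"id": "INC-3", "ips": ["192.0.2.10"]},
]
print(incidents_sharing_ips(incidents))
# {'203.0.113.7': ['INC-1', 'INC-2']}
```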
