Data search (ID 3010 in the Automation Capability Matrix) lets organizations quickly locate and analyze relevant information, which makes it an essential tool for cybersecurity, IT operations, and compliance teams. It involves querying, filtering, and retrieving data from sources such as SIEM platforms, databases, and other data stores, so that teams can uncover valuable insights, identify anomalies, and make data-driven decisions.

Automating data search saves time and resources by reducing manual work and speeding up responses to security events. Because an automated workflow can act on the data the moment a search completes, it removes the risk of overlooked "coffee break queries": searches that someone starts, steps away from, and forgets to follow up on.

Read on as we discuss how you can get started, the considerations to keep in mind, and which workflows data search will be most valuable for.

Approaching automated data search

One way to approach automated data search is through Security Information and Event Management (SIEM) systems, which collect and analyze security events from multiple sources. Data search helps security teams quickly identify and investigate SIEM alerts, so they can address potential threats more efficiently. For example, when a SIEM alert arrives, run additional queries to gather context, such as which hosts are affected.

Consider the general-purpose searches you run regularly. SIEM queries often combine several parameters, such as an index, a time range, and field values. For example, this Splunk query searches Zeek logs for remote desktop (RDP, port 3389) connections from a single local device:

```
index=zeek earliest=04/20/2023:00:00:00
| search dest_port=3389 src_ip=192.168.1.1
```

Although this search is useful for seeing where remote desktop connections take place in your environment, every value in it is hard-coded, which limits its usefulness in automation. Instead, try replacing some of the query parameters with variables:

```
index=<<index_name>> earliest=<<date>>:00:00:00
| search dest_port=<<port>> src_ip=<<src_ip>>
```

Now you have a versatile query that can search any index, start date (supplied in Splunk's default MM/DD/YYYY format), service port, and source IP address. Expand these queries to cover even more parameters and operations!
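The same substitution can be sketched in a few lines of Python. This is a minimal illustration rather than how any particular automation platform does it: the hypothetical `render_query` function fills each `<<name>>` placeholder from a dictionary and rejects values containing spaces, quotes, or pipes, on the assumption that a substituted value should never be able to append extra search commands. The allowed character set is an illustrative choice.

```python
import re

# The parameterized query from above, with <<name>> placeholders.
SPL_TEMPLATE = (
    "index=<<index_name>> earliest=<<date>>:00:00:00\n"
    "| search dest_port=<<port>> src_ip=<<src_ip>>"
)

PLACEHOLDER = re.compile(r"<<(\w+)>>")
# Assumed allowlist of characters for substituted values. Rejecting spaces,
# quotes, and pipes stops a value from appending extra search commands.
SAFE_VALUE = re.compile(r"[A-Za-z0-9_.:/-]+")


def render_query(template: str, params: dict) -> str:
    """Replace every <<name>> placeholder with a validated value from params."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in params:
            raise KeyError(f"no value supplied for <<{name}>>")
        value = str(params[name])
        if not SAFE_VALUE.fullmatch(value):
            raise ValueError(f"unsafe value for <<{name}>>: {value!r}")
        return value

    return PLACEHOLDER.sub(substitute, template)


if __name__ == "__main__":
    print(render_query(SPL_TEMPLATE, {
        "index_name": "zeek",
        "date": "04/20/2023",
        "port": "3389",
        "src_ip": "192.168.1.1",
    }))
```

Passing a `src_ip` of `1.1.1.1 | delete` raises a `ValueError` instead of producing a modified query, which matters once the values come from alerts or user input rather than from you.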

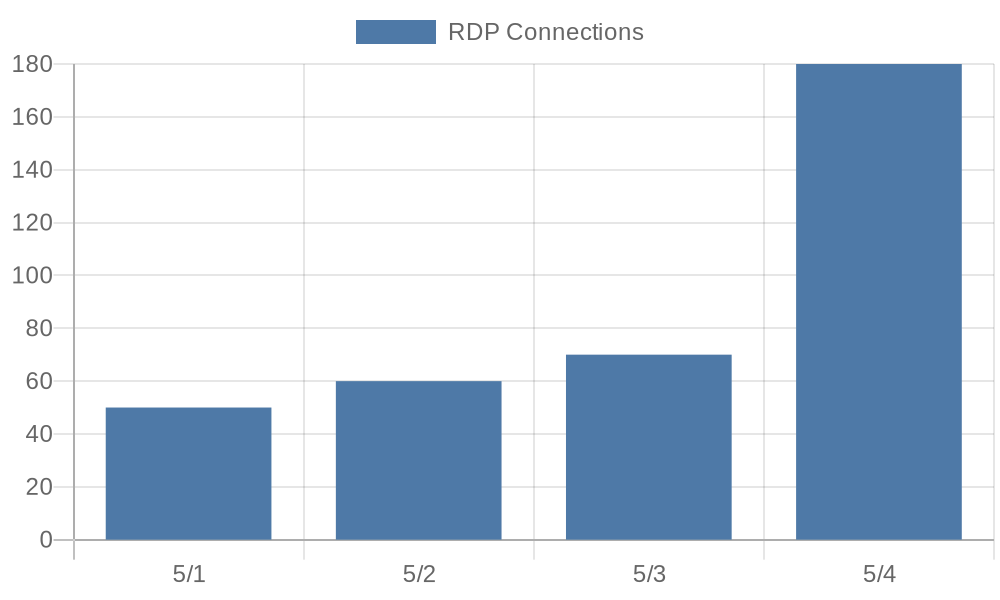

For instance, schedule statistical queries to run regularly and present the results in a spreadsheet or as an image. Splunk offers the stats and tstats commands, while Elastic provides aggregations. Once the data is generated, tools like quickchart.io can turn it into visualizations to include in Slack messages or emails.

A chart consisting of data from a SIEM platform.
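As a rough illustration of the visualization step, here is a Python sketch that turns aggregated rows (for example, the output of a `stats count BY src_ip` search) into a chart image URL. It assumes QuickChart's public endpoint at `https://quickchart.io/chart`, which accepts a Chart.js configuration in the `c` query parameter; the row format and the `build_chart_url` name are hypothetical.

```python
import json
from urllib.parse import quote

QUICKCHART_BASE = "https://quickchart.io/chart"


def build_chart_url(rows: list, label_field: str, value_field: str,
                    title: str) -> str:
    """Turn aggregated search rows into a QuickChart bar-chart image URL.

    rows is assumed to look like [{"src_ip": "10.0.0.5", "count": "12"}, ...],
    i.e. the shape of a stats/aggregation result.
    """
    config = {
        "type": "bar",
        "data": {
            "labels": [str(row[label_field]) for row in rows],
            "datasets": [{
                "label": title,
                # Search APIs often return numbers as strings, so convert them.
                "data": [int(row[value_field]) for row in rows],
            }],
        },
    }
    # QuickChart reads a Chart.js config from the `c` query parameter.
    # Compact separators keep the JSON, and therefore the URL, short.
    encoded = quote(json.dumps(config, separators=(",", ":")), safe="")
    return f"{QUICKCHART_BASE}?c={encoded}"
```

The resulting URL can be embedded as an image in a Slack message or an email. Charts with many data points produce long URLs, so for those it may be better to send the configuration in a POST request instead; check QuickChart's documentation for the options it supports.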

Finally, use scheduled data searches to build new data tables that record counts of specific data points at consistent intervals. A useful example is regularly querying for hosts that fail to meet certain health requirements and recording the results. Over time, you can see whether this number is increasing or decreasing and identify which hosts are not being addressed.
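One way to analyze those recorded results is sketched below in Python. It assumes each scheduled run stores the set of failing host names keyed by date (a hypothetical storage format) and reports the count per day, the net change across the period, and the hosts that failed in every snapshot, which are the ones nobody appears to be addressing.

```python
from datetime import date


def summarize_health_trend(snapshots: dict) -> dict:
    """Summarize scheduled snapshots of unhealthy hosts, keyed by date.

    snapshots is assumed to map a datetime.date to the set of host names that
    failed the health check on that day.
    """
    days = sorted(snapshots)
    if not days:
        return {"counts": [], "change": 0, "persistent": []}
    counts = [(day.isoformat(), len(snapshots[day])) for day in days]
    # Hosts that failed in every snapshot have not been remediated at all
    # during the recorded period.
    persistent = set.intersection(*(set(snapshots[day]) for day in days))
    return {
        "counts": counts,
        "change": counts[-1][1] - counts[0][1],
        "persistent": sorted(persistent),
    }


if __name__ == "__main__":
    print(summarize_health_trend({
        date(2023, 4, 1): {"web-01", "db-02", "app-03"},
        date(2023, 4, 2): {"db-02", "app-03"},
        date(2023, 4, 3): {"app-03", "web-04"},
    }))
```

A negative `change` means the number of failing hosts is falling; a non-empty `persistent` list is a natural candidate for a follow-up ticket or notification.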

Implementation considerations

Various SIEM platforms and database technologies employ different methods for accessing data through APIs. Since many searches can take a considerable amount of time to retrieve complete data sets, it's important to keep certain techniques in mind when implementing data search.

Search jobs

Many SIEM platforms execute queries as 'jobs'. Instead of returning results immediately, the platform accepts the query, assigns it a job ID, and schedules a worker to run it when one becomes available. You then use the job ID to check the job's status and retrieve the data once the job is complete. Splunk and Sumo Logic are examples of platforms that use this approach. Other platforms, like Google BigQuery, create a job only if they detect that the query could take longer than a short period of time. Understanding how your data store returns data is essential to retrieving complete and accurate results.
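The job pattern can be sketched generically in Python. The three callables stand in for your platform's API; in Splunk's REST API, for instance, you create a job with a POST to `/services/search/jobs`, which returns a search ID (sid), check the job's `dispatchState` at `/services/search/jobs/{sid}`, and read results from `/services/search/jobs/{sid}/results`. The `DONE` and `FAILED` state names below follow Splunk's `dispatchState` values; other platforms use different names, so treat them as an assumption to adapt.

```python
import time
from typing import Callable


def run_search_job(
    submit: Callable[[str], str],
    get_state: Callable[[str], str],
    get_results: Callable[[str], list],
    query: str,
    poll_interval: float = 5.0,
) -> list:
    """Submit a query as a job, poll until it finishes, then fetch the results."""
    job_id = submit(query)              # e.g. Splunk returns a search ID (sid)
    while True:
        state = get_state(job_id)       # e.g. Splunk's dispatchState
        if state == "DONE":
            return get_results(job_id)
        if state == "FAILED":
            raise RuntimeError(f"search job {job_id} failed")
        time.sleep(poll_interval)       # queued or still running: wait, re-check


if __name__ == "__main__":
    # Stand-in for a real API: the job reports two in-progress states, then DONE.
    states = iter(["QUEUED", "RUNNING", "DONE"])
    print(run_search_job(
        submit=lambda q: "sid-123",
        get_state=lambda job_id: next(states),
        get_results=lambda job_id: [{"host": "web-01", "count": "4"}],
        query="search index=zeek dest_port=3389",
        poll_interval=0.1,
    ))
```

This loop waits indefinitely; a production version should also cap the total wait time, as discussed under handling long queries and timeouts.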

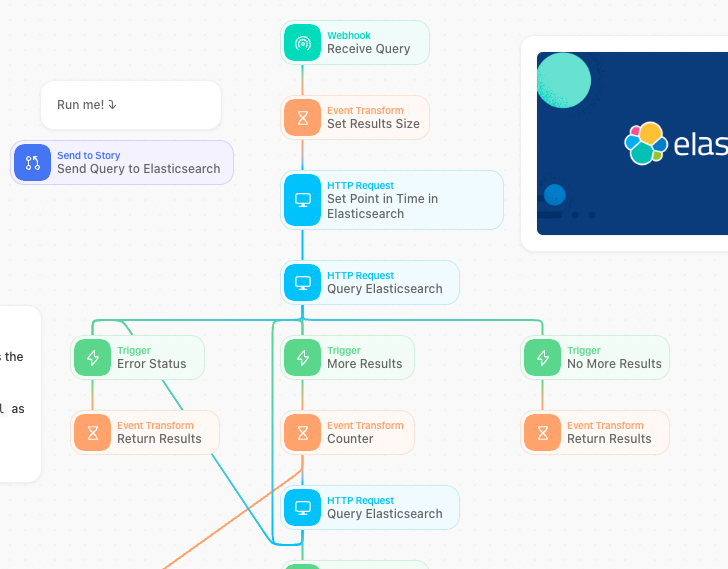

Paginated results

When you query large amounts of data, the full result set may be too large to return in a single response! To address this, many data platforms paginate: they return only a subset of the results along with a way to request the next subset. Look for terms such as 'offset', 'page', 'scroll', or 'next' in a platform's API documentation to identify the method it uses. If your implementation doesn't account for pagination, you could miss a significant portion of the data!
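A minimal offset-based pagination loop might look like the Python sketch below. `fetch_page(offset, count)` is a hypothetical wrapper around your platform's API (Splunk's job results endpoint, for example, accepts `offset` and `count` parameters). The loop assumes that a page shorter than `page_size` means the data is exhausted; for APIs that return a cursor or `next` token instead, loop until the token is absent.

```python
from typing import Callable, Iterator


def iter_all_results(
    fetch_page: Callable[[int, int], list],
    page_size: int = 1000,
) -> Iterator:
    """Yield every result by requesting successive offset-based pages."""
    offset = 0
    while True:
        page = fetch_page(offset, page_size)
        yield from page
        if len(page) < page_size:
            # A short or empty page means there is nothing left to fetch.
            return
        offset += page_size


if __name__ == "__main__":
    data = [{"event": n} for n in range(2500)]
    # Fake API that slices an in-memory list the way offset/count would.
    fake_fetch = lambda offset, count: data[offset:offset + count]
    print(sum(1 for _ in iter_all_results(fake_fetch)))
```

Using a generator means downstream steps can process results page by page instead of holding the entire result set in memory.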

An example Tines story to retrieve multiple pages of results.

Handling long queries and timeouts

Some queries may take longer to complete and could even time out. In these cases, it's good practice to recognize when a query has been running for an excessive amount of time and provide a link to the job or query status. This allows someone to manually retrieve the results or intervene to stop the long-running process.
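A sketch of this approach in Python: the hypothetical `wait_for_job` function polls a job until it reports `DONE` or `FAILED`, and if `max_wait` seconds pass first, it returns a message containing the job's status link instead of blocking forever. The state names, default limits, and the `status_url` you pass in are assumptions to adapt to your platform.

```python
import time
from typing import Callable


def wait_for_job(
    get_state: Callable[[str], str],
    job_id: str,
    status_url: str,
    max_wait: float = 300.0,
    poll_interval: float = 10.0,
) -> dict:
    """Poll a job until it finishes or max_wait seconds elapse."""
    deadline = time.monotonic() + max_wait
    while True:
        state = get_state(job_id)
        if state in ("DONE", "FAILED"):
            return {"finished": True, "state": state}
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            break
        # Never sleep past the deadline.
        time.sleep(min(poll_interval, remaining))
    # Stop waiting, but hand a person the link so they can collect the
    # results later or cancel the long-running job themselves.
    return {
        "finished": False,
        "state": state,
        "message": f"Search {job_id} still {state} after {max_wait:.0f}s: {status_url}",
    }
```

The returned message can be posted to a Slack channel or a ticket so that someone can pick up the results or stop the search.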

Where to implement data search

Data search is valuable in numerous security automation workflows, and it is often best implemented in a way that can be reused across them. If your automation makes it easy to change the query, tables or indexes, time frames, and other variables, the same search logic can serve many different contexts.

Ad hoc searching

The simplest and most common way to implement data search is by enabling ad hoc searching without requiring users to log in to a SIEM platform or connect to a data store. By creating accessible search interfaces in tools like Slack, you can extend access to specific data sets for other teams in a systematic and secure manner.
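As an illustration of keeping ad hoc access systematic and secure, the Python sketch below turns the text of a chat command, such as `index=zeek dest_port=3389`, into a Splunk search, but only for indexes and fields the requesting team is allowed to use. The team names, index allowlist, field list, and `build_adhoc_query` function are all hypothetical. The query is prefixed with `search` because Splunk's search job API generally expects queries that don't begin with a pipe to start with a search command.

```python
import re
import shlex

# Hypothetical policy: which indexes each team may search, and which fields
# may be filtered on.
ALLOWED_INDEXES = {
    "it-ops": {"zeek", "dhcp"},
    "security": {"zeek", "dhcp", "edr"},
}
ALLOWED_FIELDS = {"src_ip", "dest_ip", "dest_port", "host"}
SAFE_VALUE = re.compile(r"[A-Za-z0-9_.:/-]+")


def build_adhoc_query(team: str, command_text: str) -> str:
    """Turn chat text like 'index=zeek dest_port=3389' into a Splunk search."""
    terms = {}
    for token in shlex.split(command_text):
        field, sep, value = token.partition("=")
        if not sep:
            raise ValueError(f"expected field=value, got {token!r}")
        if not SAFE_VALUE.fullmatch(value):
            raise ValueError(f"unsafe value for {field!r}: {value!r}")
        terms[field] = value
    index = terms.pop("index", None)
    if index not in ALLOWED_INDEXES.get(team, set()):
        raise PermissionError(f"team {team!r} may not search index {index!r}")
    unknown = set(terms) - ALLOWED_FIELDS
    if unknown:
        raise ValueError(f"unsupported fields: {sorted(unknown)}")
    filters = " ".join(f"{field}={value}" for field, value in sorted(terms.items()))
    return f"search index={index} {filters}".rstrip()
```

Keeping the policy in data rather than in the chat integration means granting another team access to a data set is a one-line change that is easy to review.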

Phishing response

Incorporating data search into various stages of your phishing response automation can add significant value. For example, search email logs to identify who else has received messages with similar subject lines or from the same senders. Investigate any hosts that executed binaries received as file attachments. Examine web proxy logs for POST requests, which may contain submitted passwords, to URLs and domains found in suspicious emails.
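The searches described above could be generated from a reported email's indicators roughly as follows. This Python sketch assumes hypothetical `email`, `edr`, and `proxy` indexes and field names such as `recipient`, `sha256`, and `dest_host`; map them to your own data. The `spl_quote` helper assumes SPL's convention of escaping backslashes and double quotes inside quoted strings, so a subject line containing quotes stays inside its string.

```python
from urllib.parse import urlsplit


def spl_quote(value: str) -> str:
    """Wrap a value in double quotes, escaping backslashes and double quotes."""
    return '"' + value.replace("\\", "\\\\").replace('"', '\\"') + '"'


def phishing_queries(sender: str, subject: str, attachment_hashes: list,
                     urls: list) -> dict:
    """Build follow-up searches for one reported phishing email.

    The index and field names are placeholders; map them to your own data.
    """
    queries = {
        # Who else received mail from this sender or with this subject?
        "other_recipients": (
            f"index=email (sender={spl_quote(sender)} OR subject={spl_quote(subject)})"
            " | stats values(recipient) AS recipients"
        ),
    }
    if attachment_hashes:
        # Which hosts executed a file matching an attachment hash?
        hashes = " OR ".join(f"sha256={spl_quote(h)}" for h in attachment_hashes)
        queries["attachment_execution"] = f"index=edr ({hashes}) | stats values(host) AS hosts"
    # Deduplicate the domains behind the URLs found in the email.
    domains = sorted({urlsplit(u).hostname for u in urls} - {None})
    if domains:
        # Did anyone POST data (possibly credentials) to those domains?
        sites = " OR ".join(f"dest_host={spl_quote(d)}" for d in domains)
        queries["credential_posts"] = (
            f'index=proxy http_method="POST" ({sites})'
            " | stats count BY src_ip dest_host"
        )
    return queries
```

Each query can then be run with whatever job and pagination handling your platform needs, and the results attached to the phishing case.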

Searching for historical events

When new vulnerabilities arise, it's not unusual for indicators of exploitation to already exist within a given environment. Data points such as file hashes, command line arguments, or client requests to a web server can expose past actions that were only subsequently recognized as malicious. Leverage data search to address new indicators of compromise that stem from security research and vulnerability disclosures, enabling you to evaluate the potential impact on your environment.
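A historical sweep for newly published indicators might be generated like this. The Python sketch assumes a hypothetical mapping from indicator type to the index and field that holds it, searches back a configurable number of days using Splunk's relative time syntax (for example, `earliest=-90d`), and splits long indicator lists into several smaller queries. Each query reports, per host, when a matching indicator was first seen and how many matching events there were.

```python
# Hypothetical mapping from indicator type to the index and field holding it.
IOC_SOURCES = {
    "sha256": ("edr", "sha256"),
    "command_line": ("edr", "process_command_line"),
    "uri_path": ("web", "uri_path"),
}


def spl_quote(value: str) -> str:
    """Wrap a value in double quotes, escaping backslashes and double quotes."""
    return '"' + value.replace("\\", "\\\\").replace('"', '\\"') + '"'


def historical_sweep(iocs: dict, lookback_days: int = 90,
                     chunk_size: int = 50) -> list:
    """Build lookback searches for newly published indicators of compromise."""
    queries = []
    for ioc_type, values in iocs.items():
        index, field = IOC_SOURCES[ioc_type]   # KeyError for unmapped types
        unique = sorted(set(values))
        # Split long indicator lists so no single query grows unwieldy.
        for start in range(0, len(unique), chunk_size):
            chunk = unique[start:start + chunk_size]
            terms = " OR ".join(f"{field}={spl_quote(v)}" for v in chunk)
            queries.append(
                f"index={index} earliest=-{lookback_days}d ({terms})"
                " | stats min(_time) AS first_seen count BY host"
            )
    return queries
```

Any host that shows up in these results is a lead for deeper investigation, since a match only shows that the indicator appeared, not that exploitation succeeded.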

By using automation in data searches, teams can streamline the process and save both time and resources, ultimately enhancing security and efficiency across multiple workflows.