At Tines, our mission is to power the world's most important workflows, and AI has recently become a huge part of that story. We currently have two AI-powered capabilities that enable teams to work faster, reduce barriers to entry, and spend more time on the fulfilling work that attracted them to their professions in the first place. But this is only possible because they trust that these features are private and secure by design.

Today, I'm going to share how we built AI in Tines, specifically how we developed an approach to security and privacy that's unique within the workflow automation market. Let's start with how we at Tines think about AI.

The relationship between automation and AI

I hold the view that automation and AI are synonyms. Sure, automation has a slightly historical connotation and AI has a futuristic one, but they're both focused on the same result - if we can offload more tasks to computers, especially the boring, manual, repetitive work, we can free people up to do creative work, and, ultimately, live happier, more fulfilling lives.

Based on this idea, we as an automation company now think about ourselves as an AI company. Along with the rest of the product team at Tines, I’ve been thinking about this a lot. We’ve been doing tons of building, we've been prototyping, fleshing out AI features, and seeing what works.

And what you very quickly discover as somebody who builds AI features is that impressive demos are unbelievably easy to create. This is the most demo-friendly software innovation there's ever been.

At the same time, actually useful features - rigorous, reliable, dependable features - are kind of hard to build. There's a gulf between the demo and the reality.

Demo-worthy vs. deployable

If you’ve been paying attention to what’s going on with AI, you’ll know that a lot of the AI features vendors are shipping today are demoware: they're actually not very helpful for the user. So I’ve started asking myself these questions whenever I see a vendor making claims about AI.

Is it better than ChatGPT in a new tab?

Does it work for my real use case?

How slow is it… honestly?

How many false positives are there?

In a lot of cases, this is the reality that you encounter when you start looking at AI features. And I think the reason companies are shipping what I believe are underwhelming AI features is that they feel pressure to.

We at Tines really wanted to resist this, and so we actually didn't ship 50 or 60 or 70 prototypes that had good demos. This is one of them.

This is a prototype in which we reimagined the Tines interface around a language model that you could chat with and that would help you execute things. But it just didn't work. It didn’t work well enough for what our customers use Tines for - powering their most important workflows. So we moved on.

Even when you eventually find an AI feature or an approach that works, you instantly hit this problem, which is that you're naturally going to be giving the AI access to some data. And you have to now think about the new privacy and security concerns with doing so.

Questions to ask when developing AI tools

Are we going to be passing data around the internet?

Are we doing AI via API?

Are there regulation and compliance ramifications?

Is this going to cross legal region boundaries?

Is there any training happening on our customer data?

Who's logging metadata or data?

Do we have to trust a new vendor or a subprocessor?

When product teams find a feature that works and then face all these challenges, they tend to start mitigating. We spent a lot of effort trying to mitigate these risks.

When you’re mitigating, you do things like adding governance features, and adding really complicated audit logging, all these new screens and applications, and tons of new docs that try and explain things over and over again to calm customers down. And I will say that some of these constraints actually lead to really creative improvements.

But there is an inherent trade-off. The less data you feed the LLM, the worse the feature. So there's a horrible trade-off between security and privacy and how good the feature is, and that's because language models are fueled by context. You have to give them context of the problem you're trying to solve and that naturally involves data.
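To see why this trade-off bites, consider a common mitigation: redacting identifiers before a prompt leaves your environment. The Python sketch below is a hypothetical example with deliberately simple patterns, not a description of anything Tines shipped.

```python
import re

# Deliberately simple patterns for illustration; real redaction needs more care.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def redact(text: str) -> str:
    """Replace email addresses and IPv4 addresses with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return IPV4.sub("[IP]", text)


# Made-up alert text using documentation-reserved example values.
alert = "Login for alice@example.com from 203.0.113.7 after 5 failures from 203.0.113.7"
print(redact(alert))
```

The redacted prompt is safer to send, but the model can no longer tell whether the two addresses match or who the account belongs to, which is often exactly the context that makes its answer useful.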

The good news is that there actually is a better way.

The ideal solution for powering products with LLMs

The ideal solution contains the following attributes:

Something that's inside your infrastructure that doesn't talk to the internet and can't be reached on the public internet

Something that can access the very best language models out there, as well as great open-source language models

Something with strong security guarantees like no logging or training

Something that's easy to set up and to scale almost infinitely

The solution to these problems is available today.

Building our first AI feature - automatic mode

Let’s talk about the first feature that we shipped that actually worked. We call this automatic mode.

When you're building a workflow, you often have to transform data. It could be that you've got some data coming in from a SIEM alert and you need to normalize it to a schema. And that kind of data transformation was actually our biggest usability issue in Tines.

We wondered if we could get the language model to write code for you so even if you didn't understand how to write code, you could take advantage of the language model and have it fill in the blanks.

This actually worked wonderfully. We built it out using a leading foundational model provider, using their API, so this is AI via API. We ended up with something pretty nice where the AI writes the code and shows you the output. Here it is helping the user perform a simple action: analyzing some weather forecast data.
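To make the transformation problem concrete, here is a minimal sketch in Python of the kind of small function a language model might write to normalize a SIEM alert. The input field names (`src_ip`, `event_time`, `sev`, `msg`) and the target schema are hypothetical, invented for illustration; they are not taken from Tines or from any particular SIEM.

```python
from datetime import datetime, timezone

# Hypothetical mapping from a vendor's severity labels to a numeric scale.
SEVERITY_LEVELS = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def normalize_alert(raw: dict) -> dict:
    """Map a raw alert (hypothetical field names) onto a common schema."""
    # Convert epoch seconds into an ISO 8601 UTC timestamp.
    observed = datetime.fromtimestamp(raw["event_time"], tz=timezone.utc)
    return {
        "source_ip": raw.get("src_ip"),
        "observed_at": observed.isoformat(),
        # Unknown or missing severities fall back to 0.
        "severity": SEVERITY_LEVELS.get(str(raw.get("sev", "")).lower(), 0),
        "title": str(raw.get("msg", "")).strip(),
    }


alert = {"src_ip": "10.0.0.5", "event_time": 0, "sev": "High", "msg": " Login failure "}
print(normalize_alert(alert))
```

The point of the sketch is that the model is only needed once, to write the function. After that, the workflow runs ordinary deterministic code.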

We got super excited and we wanted to ship it. And we decided to add this foundational model provider to our list of subprocessors. This is something we have to do as a product team to inform our customers of who might be managing their data.

We hadn't even shipped this feature yet and we scared the life out of our customers. Their reactions were pretty strong - “Oh no, what are you doing with my important data? Are you training on it? What's going on? We don't want AI!”

And this was interesting because we had built this feature with security and privacy in mind. It only needed a tiny bit of data to build what you needed, and then it would run the code and there was no more AI. But just the mention of a new AI provider was scary to our customers, and I bet it would be scary to many security practitioners who care deeply about this stuff.

So we had a bit of a quandary. We considered applying all of the mitigations I mentioned earlier. But a couple of weeks after we reached that point, two things changed:

Anthropic, one of the leading AI labs, released their Claude 3 language model family.

Claude 3 Sonnet landed on Amazon Bedrock.

The fact that Claude 3 Sonnet was available the same day really told us that Amazon were committed to moving fast with this technology. We plugged in Claude 3 Sonnet and it worked. It worked pretty much perfectly for our feature.

Leveraging Amazon Bedrock and AWS PrivateLink for AI in Tines

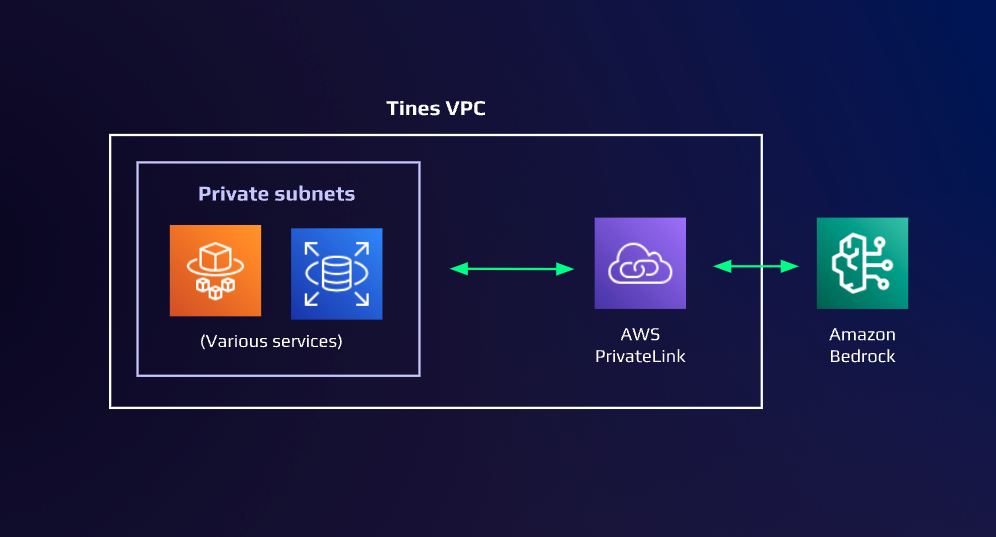

Above you can see a very abridged version of the architecture we're using. Our general AWS services run in subnets on our VPC, and we use AWS PrivateLink to connect to Bedrock, which gives us a bunch of the properties here on the left: no training or logging, because Amazon are taking these things very seriously; no internet travel, thanks to PrivateLink; and no new vendor to trust, since we already trust AWS with all of our data.
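As a rough sketch of what calling a model over this kind of setup could look like, the Python below builds a request for Claude on Bedrock and sends it through a client object passed in by the caller. It is not Tines' implementation. The function names are hypothetical, and the model ID and request body follow Amazon Bedrock's Anthropic Messages format as I understand it, so check both against the current Bedrock documentation.

```python
import json

# Assumed model identifier for Claude 3 Haiku on Bedrock; verify before use.
MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"


def build_request(prompt: str, max_tokens: int = 512) -> dict:
    """Build keyword arguments for a Bedrock runtime `invoke_model` call."""
    body = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": prompt}]}
        ],
    }
    return {
        "modelId": MODEL_ID,
        "contentType": "application/json",
        "accept": "application/json",
        "body": json.dumps(body),
    }


def ask_model(client, prompt: str) -> str:
    """Send the prompt using the supplied client and return the reply text."""
    response = client.invoke_model(**build_request(prompt))
    payload = json.loads(response["body"].read())
    # The Messages format returns a list of content blocks; take the first one.
    return payload["content"][0]["text"]
```

In a real deployment, `client` would be something like `boto3.client("bedrock-runtime", region_name=...)` created from inside the VPC. With an interface VPC endpoint for Bedrock and private DNS enabled, the regional hostname should resolve to private addresses, so the privacy properties come from the AWS network configuration rather than from anything in this code.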

Selecting the correct model for the task

Next, we discovered a new problem - Claude 3 Sonnet was really smart, but a little bit too slow. And actually the user interface of this feature required really snappy interactions.

And then, 10 days later, the Amazon team shipped Claude 3 Haiku on Bedrock. It's a smaller model from the same family, and it's a lot faster.

And this just kept happening. Every time we reached an edge case with Bedrock, the team were already ahead of us and shipping an answer, seemingly the next day. And again that really told us that Bedrock was a significant area of investment for Amazon and something that we could bet on.

So let's think back to that ideal solution that we had been craving. We really have met all of those criteria.

✔️ within your infrastructure

✔️ doesn’t talk to/on the Internet

✔️ can access the very best LLMs

✔️ can also use open-source LLMs

✔️ guarantees no logging

✔️ guarantees no training

✔️ trivial to set up and scale

We had a properly private and secure version of the foundation we needed to ship this feature to our customers and we did that. We didn't need to send that GDPR notification again, because there was no new subprocessor.

We were taking advantage of all these upsides and then we reflected and we realized something. We built this feature thinking that we had to be very careful about security and privacy, thinking that we would need to minimize data, but we've now come up with this set of parameters that makes this just like any other part of our application.

What kind of AI features could we build if we didn't need to worry about the LLM having access to our customers’ data?

And that's the real payoff here. It's that when you solve for security and privacy, you can build much more ambitious and much more performant AI products.

Our second AI feature - the AI action

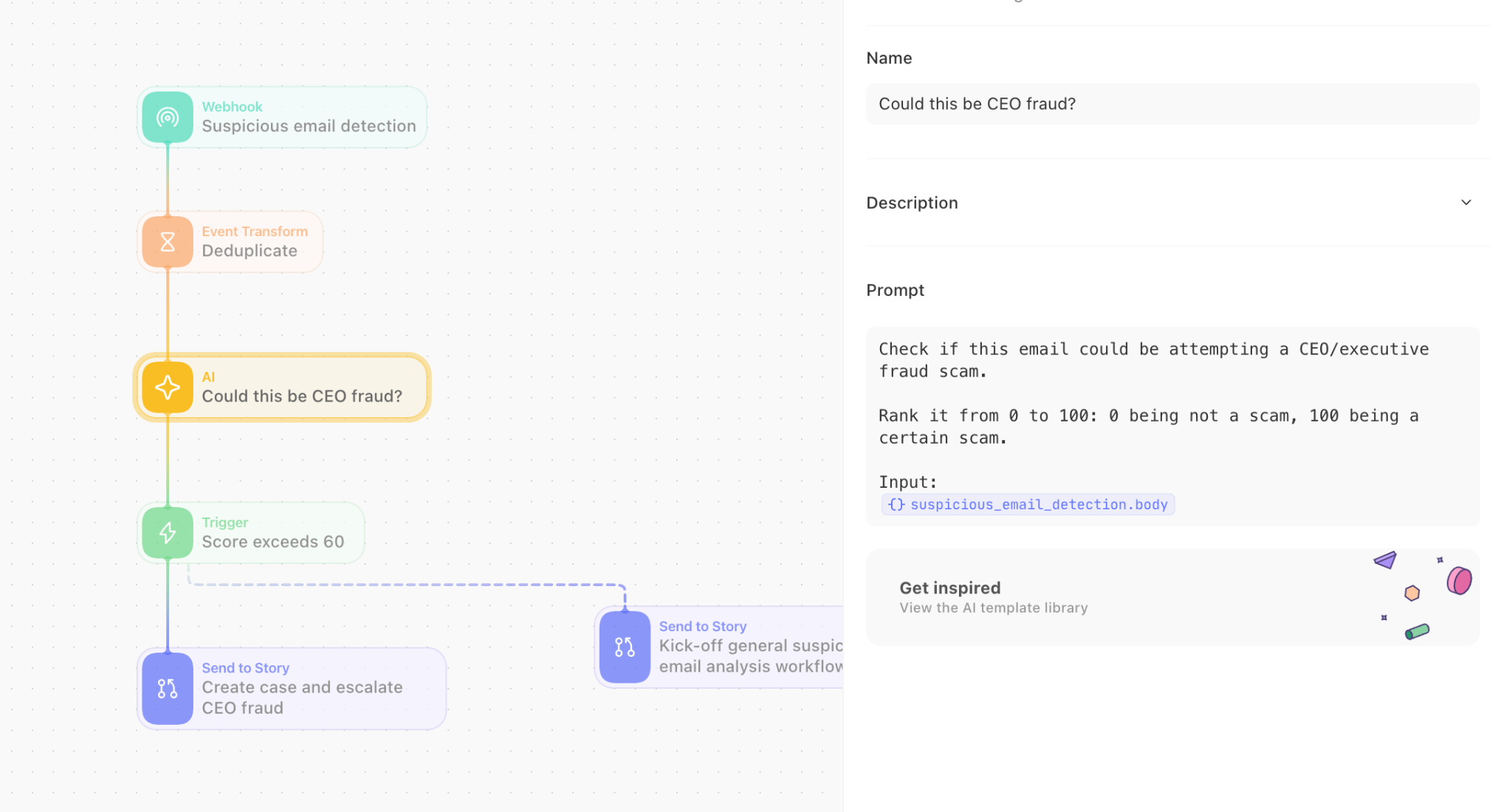

Tines has always had seven building blocks, seven actions. They're the steps in the workflow. So now that we have a secure foundation for AI, how do we get our customers to use it everywhere in their workflows? Simple answer - it was time for our eighth action.

So just a couple weeks ago, we released the AI action and this is much less concerned about the privacy boundary, because nothing is leaving our infrastructure.

Being able to use full, possibly very sensitive data as input is a massive game changer for almost every single security workflow out there.

I'll give you one tiny example. This is a simplified version of a suspicious email analysis workflow. We want to find out whether this email is possibly CEO fraud: is somebody trying to get us to wire money to an attacker's account? And using that prompt, the Claude language model will get this right pretty much every time with exceptional precision, out of the box.

Our customers are now able to use this in all sorts of places in their workflows without concern for security or privacy.

A simplified version of a suspicious email analysis workflow using the AI action
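The prompt used in that workflow is not reproduced in this post. Purely as an illustration, a prompt and response handler for this kind of check might look like the Python sketch below. The prompt wording, the JSON reply shape, and the function names are all assumptions, not a description of what the AI action does internally.

```python
import json

# Hypothetical prompt; the real workflow's wording is not shown in this post.
PROMPT_TEMPLATE = (
    "You are reviewing an email reported as suspicious.\n"
    "Decide whether it is likely CEO fraud, meaning an attempt to get the "
    "recipient to wire money to an attacker's account.\n"
    'Reply with JSON only: {{"ceo_fraud": true or false, "reason": "..."}}\n\n'
    "From: {sender}\nSubject: {subject}\n\n{body}"
)


def build_prompt(sender: str, subject: str, body: str) -> str:
    """Fill the template with the full, unredacted email."""
    return PROMPT_TEMPLATE.format(sender=sender, subject=subject, body=body)


def parse_verdict(reply: str) -> dict:
    """Parse the model's JSON reply; anything unexpected is flagged for review."""
    try:
        data = json.loads(reply)
        verdict = data["ceo_fraud"]
        if not isinstance(verdict, bool):
            raise ValueError("ceo_fraud must be a JSON boolean")
        return {"ceo_fraud": verdict, "reason": str(data.get("reason", ""))}
    except (ValueError, KeyError, TypeError):
        # None means "no usable answer", so a later step can route to a human.
        return {"ceo_fraud": None, "reason": "unparseable model reply"}
```

Because the whole email can be passed in, there is no redaction step here. Asking for a strict JSON reply and treating anything else as "needs review" keeps the downstream workflow steps deterministic.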

Three pieces of advice for security practitioners leveraging AI

I’ll leave you with three pieces of advice.

Let the buyer beware. A lot of vendors are trying to sell AI features that just genuinely do not work. Be thorough when you’re evaluating these tools.

If you're using AI via an API, I would encourage you to rethink it. I think it's an insecure approach, fundamentally, and there are better alternatives out there.

Mitigating security and privacy problems in AI is the wrong goal. It shouldn’t be about mitigating those problems. It's about finding tools that remove them in the first place.

I firmly believe that AI in Tines provides the ideal solution in the market today. Learn more about our new AI features and see example workflows, and find out what our customers are building with AI in Tines.

Start building with AI in Tines today by signing up for our always-free Community Edition.